On Wednesday, Google announced the arrival of Gemini, its new multimodal large language model built from the ground up by the company’s AI division, Google DeepMind. Among its many functions, Gemini will underpin Google Bard, which has so far struggled to emerge from the shadow of its chatbot forerunner, OpenAI’s ChatGPT.

According to a December 6 blog post from Google CEO Sundar Pichai and DeepMind co-founder and CEO Demis Hassabis, there are technically three versions of the LLM (Gemini Ultra, Pro, and Nano), each meant for different applications. A “fine-tuned” Gemini Pro now underpins Bard, while the Nano variant will appear in products such as the Pixel 8 Pro smartphone. Gemini variants will also arrive in Google Search, Ads, and Chrome in the coming months, although Ultra will not be publicly available until 2024.

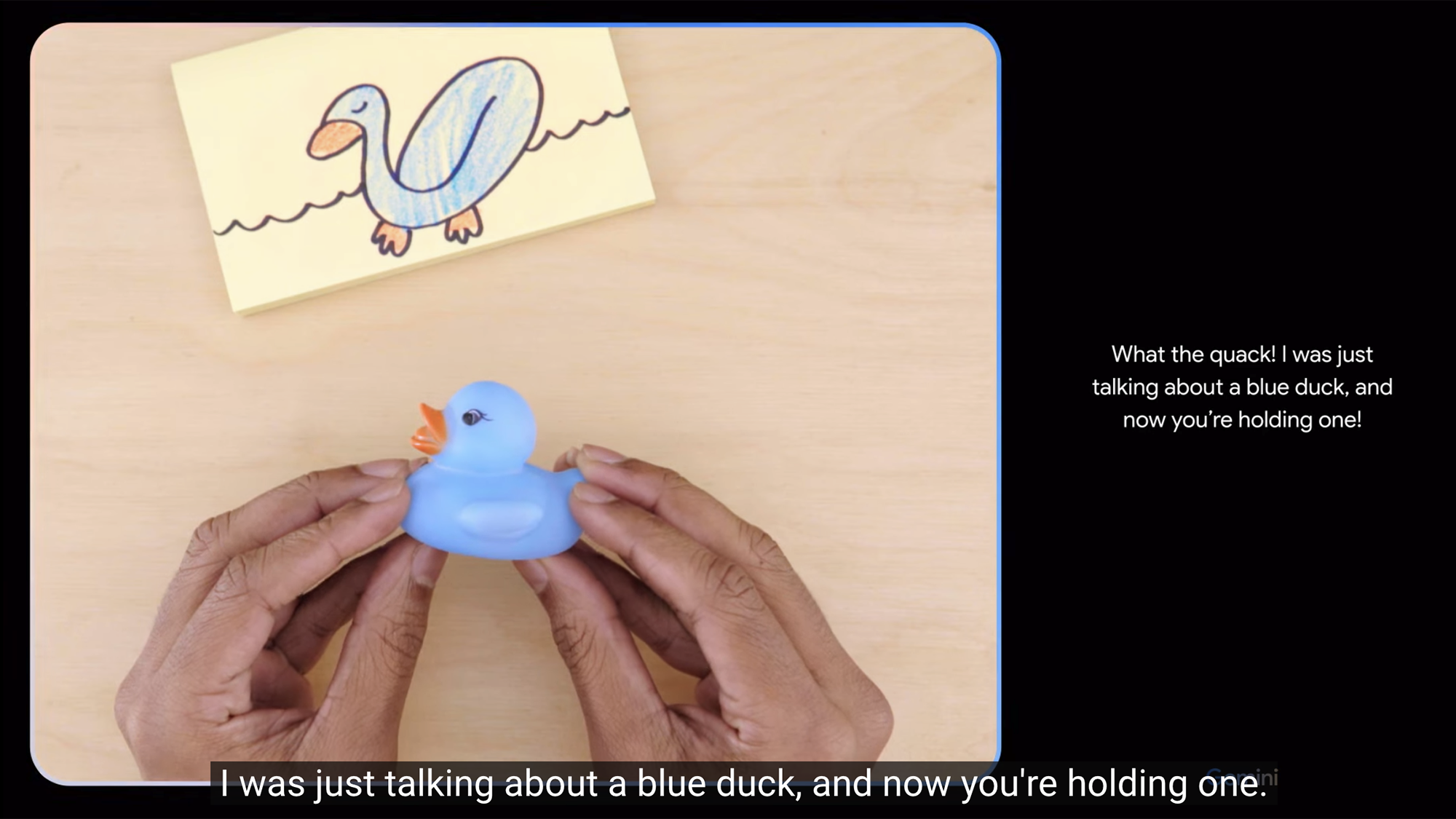

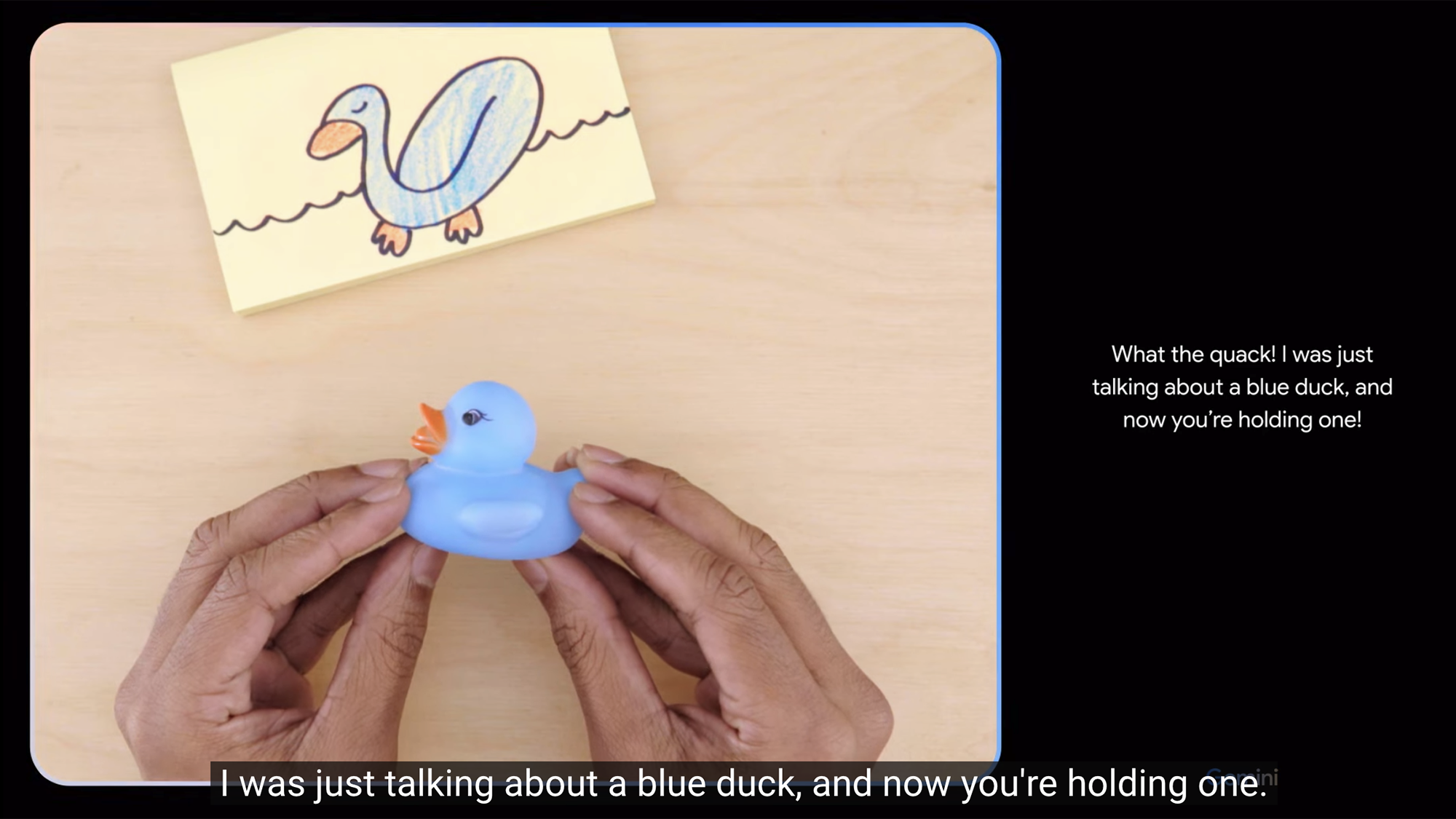

Unlike many of its AI competitors, Gemini was trained to be “multimodal” from the start, meaning it can already handle text, audio, and image-based prompts. In an accompanying video demonstration, Gemini is verbally asked to identify what is placed in front of it (a piece of paper) and then correctly identifies a user’s sketch of a duck in real time. Other abilities appear to include inferring what happens next in a paused video, generating music based on visual prompts, and assessing children’s homework, often with a slightly cheeky, pun-prone personality. It’s worth noting, however, that the video description includes the disclaimer, “For the purposes of this demo, latency has been reduced and Gemini outputs have been shortened for brevity.”

In a follow-up blog post, Google confirmed that Gemini actually responded only to a combination of still images and written user prompts, and that its demo video was edited to present a smoother, voice-driven interaction.

Gemini’s accompanying technical report indicates the LLM’s most powerful iteration, Ultra, “exceeds current state-of-the-art results on 30 of the 32 widely-used academic benchmarks used in [LLM] research and development.” That said, the improvements appear somewhat modest: on the MMLU benchmark of multidisciplinary questions, Gemini Ultra answered correctly 90 percent of the time, versus 86.4 percent for GPT-4, the model behind the paid version of ChatGPT. Statistical hairsplitting aside, the results indicate ChatGPT may have some real competition in Gemini.


Unsurprisingly, Google cautioned in Wednesday’s announcement that its new star AI is far from perfect and is still prone to the “hallucinations” that plague the emerging technology industry-wide; that is, the LLM will occasionally make up incorrect or nonsensical answers. Google also subjected Gemini to “the most comprehensive safety evaluations of any Google AI model,” according to Eli Collins, Google DeepMind VP of product, speaking at the December 6 launch event. This included testing Gemini against “real toxicity prompts,” a benchmark developed by the Allen Institute for AI that contains over 100,000 problematic inputs meant to assess a large language model’s potential political and demographic biases.

Gemini will continue to be integrated into Google’s suite of products in the coming months alongside a series of closed testing phases. If all goes as planned, a Gemini Ultra-powered Bard Advanced will become available to the public sometime next year. But as has been well established by now, the ongoing AI arms race is often difficult to forecast.

When asked whether it is powered by Gemini, Bard told PopSci that it “unfortunately” does not have access to information “about internal Google projects.”

“If you’re interested in learning more about… ‘Gemini,’ I recommend searching for information through official Google channels or contacting someone within the company who has access to such information,” Bard wrote to PopSci. “I apologize for the inconvenience and hope this information is helpful.”

UPDATE 12/08/23 11:53AM: Google published a blog post on December 6 clarifying its Gemini hands-on video, as well as the model’s multimodal capabilities. Although the demonstration may make it look like Gemini responded to moving images and voice commands, Google actually fed it a combination of still images and written prompts. The footage was then edited to reduce latency and streamline the interaction. The text of this post has since been edited to reflect this.