In a new study, computer scientists wrote algorithms for robots to arrange blocks into designs that a human would recognize as a car, a turtle, a house, and other things. But the designs weren’t programmed in. Instead, the robots learned from in-person (in-robot?) demonstrations… and by asking questions on Mechanical Turk, Amazon's crowd-sourcing website.

The combination of in-person and online, crowd-sourced learning helped robots learn tasks better than in-person demos alone did, the researchers found. In addition, the crowd-sourced robot tutoring was cheaper than hiring the same number of people to demonstrate for the robot in person. The researchers think that in the far future, crowd-sourcing could help robots learn useful tasks such as setting tables for meals or loading the dishwasher. (I mean, I wish, right?)

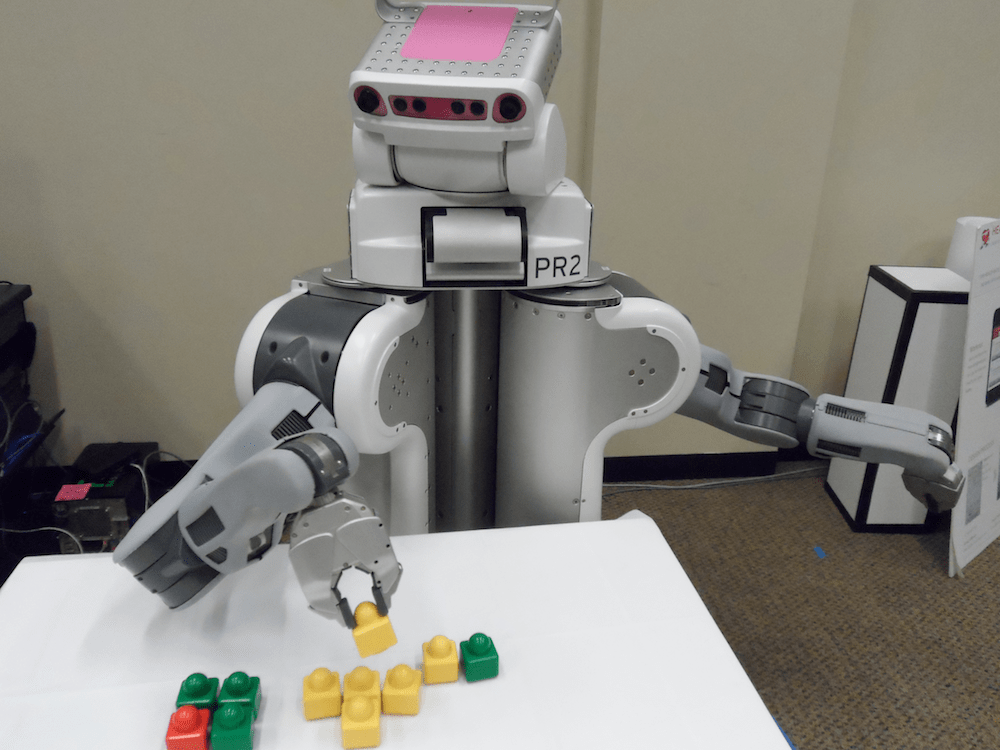

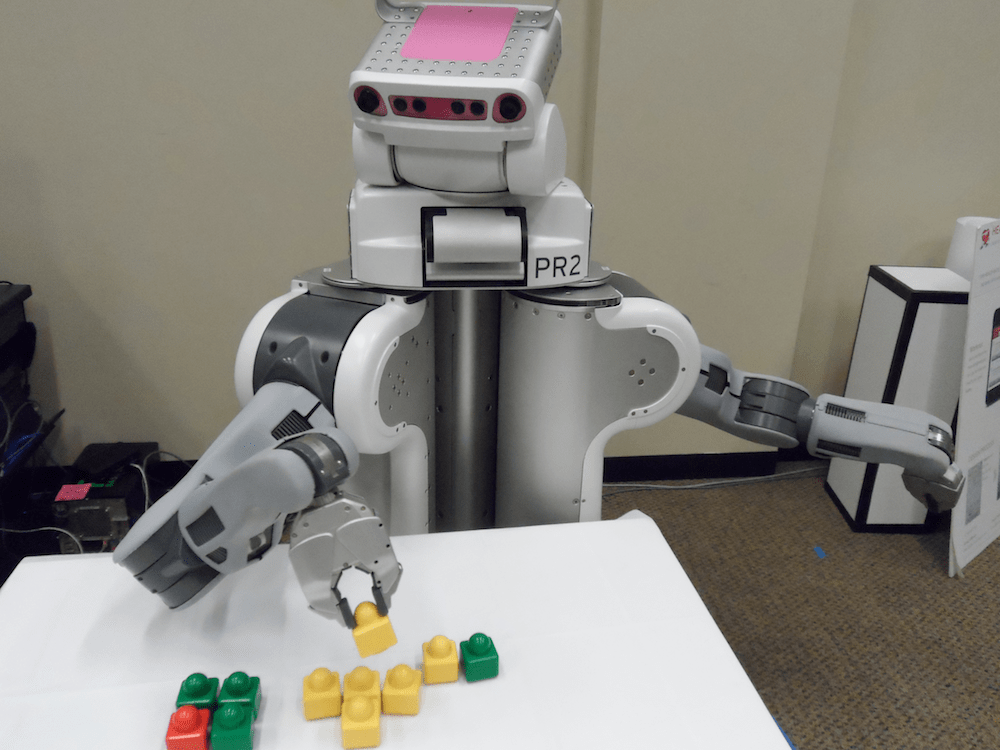

The scientists, a team from the University of Washington, equipped their own Gambit robot with a Kinect depth sensor to help it sense the colors and locations of blocks on a table. Gambits are arms with pincer hands, so they’re able to grasp objects. They’re even able to move chess pieces around a board. The programmers also tried their algorithm on a Willow Garage PR2 robot, about which Popular Science has written extensively.

The robots first got demonstrations of block flowers, fish, snakes, and other things from 14 volunteers in the lab. Often, these designs were too difficult for the robots to reproduce. So the robots also posted questions on Mechanical Turk: How would you make a car (or a person, or a baby bird) with these blocks? As the robots gathered data from hundreds of responses, they began to learn how to make something that a human would register as a “car,” but that would still be feasible for them to build. The robots also learned to recognize block patterns as one of the eight things they had learned about, even when some pieces were missing.

All of this is pretty cool, and pretty impressive. You can learn more from a paper the researchers posted online about their work. One bonus: The paper includes pictures of the responses from Mechanical Turkers. It appears some Mechanical Turk users are surprisingly clever at turning a couple dozen blocks into convincing turtles and people. Others are surprisingly bad at the task. Luckily, the University of Washington algorithm had a way of weeding out the poor designs. It asked Mechanical Turkers to rate each other’s designs, so it knew which ones were good turtles and which ones were bad.
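For the curious, here is roughly what weeding out designs by peer rating could look like in code. This is a minimal Python sketch, not the researchers' actual method: the function name `filter_designs`, the 1-to-5 rating scale, the cutoffs, and the sample data are all assumptions made up for illustration.

```python
from collections import defaultdict
from statistics import mean


def filter_designs(ratings, min_mean=3.5, min_votes=3):
    """Keep design IDs whose average peer rating clears a threshold.

    ratings: iterable of (design_id, score) pairs, one per crowd rating.
    min_mean and min_votes are illustrative cutoffs, not values from the paper.
    """
    # Group every score under the design it was given to.
    scores = defaultdict(list)
    for design_id, score in ratings:
        scores[design_id].append(score)

    # Keep designs that have enough votes and a high enough average.
    kept = [
        design_id
        for design_id, votes in scores.items()
        if len(votes) >= min_votes and mean(votes) >= min_mean
    ]
    return sorted(kept)


# Made-up ratings on a 1-5 scale for three hypothetical turtle designs.
example_ratings = [
    ("turtle_a", 5), ("turtle_a", 4), ("turtle_a", 4),
    ("turtle_b", 2), ("turtle_b", 1), ("turtle_b", 3),
    ("turtle_c", 5),  # only one vote, so it is not trusted yet
]

print(filter_designs(example_ratings))
```

With these made-up numbers, only `turtle_a` survives: `turtle_b` averages too low, and `turtle_c` has too few ratings to count. Requiring a minimum number of votes is one simple way to keep a single enthusiastic rater from pushing a bad turtle through.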

The researchers presented the results of their work earlier this month, at a robotics conference hosted by the Institute of Electrical and Electronics Engineers.