Every morning, at about 8 a.m., Anthony Levandowski walks out of his house in Berkeley and folds his six-foot-six-inch frame into the driver’s seat of his white Lexus. Levandowski is embarking on his daily commute to work. It’s the most ordinary, familiar moment there is. Most of us perform this ritual five times a week, 50 weeks out of the year. Levandowski’s commute, however, is decidedly different. He’s got a chauffeur, and it’s a robot.

Levandowski backs out of his suburban driveway in the usual manner. By the time he points his Google self-driving car down the street, it has used its GPS and other sensors to determine its location in the world. On the dashboard, right in front of the windshield, is a low-profile heads-up display. MANUAL, it reads, in sober sans serif font, white on black. But the moment Levandowski enters the freeway ramp near his house, a colorful graphic appears. It’s a schematic view of the road: two solid white vertical lines marking the boundaries of the highway and three dashed lines dividing it into four lanes. The message now reads GO TO AUTODRIVE LANE; there are two on the far side of the freeway, shown in green on the schematic. Levandowski’s car and those around him are represented by little white squares. The graphics are reminiscent of Pong. But the game play? Pure Frogger.

There are two buttons on Levandowski’s steering wheel, OFF and ON, and after merging into an auto-drive lane, he hits ON with his thumb. A dulcet female voice marks the moment by enunciating the words AUTO DRIVING with textbook precision. And with that, Levandowski has handed off control of his vehicle to software named Google Chauffeur. He takes his feet off the pedals and puts his hands in his lap. The car’s computer is now driving him to work.

Self-driving cars have been around in one form or another since the 1970s, but three DARPA Grand Challenges, in 2004, 2005, and 2007, jump-started the field. Grand Challenge alumni now populate self-driving laboratories worldwide. It’s not just Google that’s developing the technology, but also most of the major car manufacturers: Audi, Volkswagen, Toyota, GM, Volvo, BMW, Nissan. Arguably the most important outcome of the DARPA field trials was the development of a robust and reliable laser range finder. It’s the all-seeing eye mounted on top of Levandowski’s car, and it’s used by virtually every other experimental self-driving system ever built.

This year will mark another key milestone in self-driving technology. The National Highway Traffic Safety Administration (NHTSA) is widely expected to announce standards and mandates for car-borne beacons that will broadcast location information to other vehicles on the road. The beacons will warn drivers when a collision seems imminent—when the car ahead brakes hard, for example, or another vehicle swerves erratically into traffic. Automakers may then use this information to take the next step: program automated responses.

Levandowski’s commute inside of his Google self-driving car is 45 miles long, and if Chauffeur were perfect, he might use the time napping in the backseat. In reality, Levandowski has to stay awake and behind the wheel, because when Chauffeur encounters a situation in which it’s slightly unsure of itself, it asks him to retake control. Following Google policy, Levandowski drives through residential roads and surface streets himself, while Chauffeur drives the freeways. Still, it’s a lot better than driving the whole way. Levandowski has his hands on the wheel for just 14 minutes of his hour-long commute: at the very beginning, at the very end, and during the tricky freeway interchanges on the San Mateo Bridge. The rest of the time, he can relax. “Automatic driving is a fundamentally different experience than driving myself,” he told automotive engineers attending the 2012 SAE International conference. “When I arrive at work, I’m ready. I’m just fresh.”

Levandowski works at Google’s headquarters in Mountain View, California. He’s the business lead of Google’s self-driving-car project, an initiative that the company has been developing for the better part of a decade. Google has a small fleet of driverless cars now plying public roads. They are test vehicles, but they are also simply doing their job: ferrying Google employees back and forth from work. Commuters in Silicon Valley report seeing one of the cars—easily identifiable by a spinning turret mounted on the roof—an average of once an hour. Google itself reports that collectively the cars have driven more than 500,000 miles without crashing. At a ceremony at Google headquarters last year, where Governor Jerry Brown signed California’s self-driving-car bill into law, Google co-founder Sergey Brin said “you can count on one hand the number of years until ordinary people can experience this.” In other words, a self-driving car will be parked on a street near you by 2018. Yet releasing a car will require more than a website and a “click here to download” button. For Chauffeur to make it to your driveway, it will have to run a gauntlet: Chauffeur must navigate a path through a skeptical Detroit, a litigious society, and a host of technical catch-22’s.

* * *

Right now, Chauffeur is undergoing what’s known in Silicon Valley as a closed beta test. In the language particular to Google, the researchers are “dogfooding” the car—driving to work each morning in the same way that Levandowski does. It’s not so much a perk as it is a product test. Google needs to put the car in the hands of ordinary drivers in order to test the user experience. The company also wants to prove—in a statistical, actuarial sense—that the auto-drive function is safe: not perfect, not crash-proof, but safer than a competent human driver. “We have a saying here at Google,” says Levandowski. “In God we trust—all others must bring data.”

Currently, the data reveal that so-called release versions of Chauffeur will, on average, travel 36,000 miles before making a mistake severe enough to require driver intervention. A mistake doesn’t mean a crash—it just means that Chauffeur misinterprets what it sees. For example, it might mistake a parked truck for a small building or a mailbox for a child standing by the side of the road. It’s scary, but it’s not the same thing as an accident.

The software also performs hundreds of diagnostic checks a second. Glitches occur about every 300 miles. This spring, Chris Urmson, the director of Google’s self-driving-car project, told a government audience in Washington, D.C., that the vast majority of those are nothing to worry about. “We’ve set the bar incredibly low,” he says. For the errors worrisome enough to require human hands back on the wheel, Google’s crew of young testers have been trained in extreme driving techniques—including emergency braking, high-speed lane changes, and preventing and maneuvering through uncontrolled slides—just in case.
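To put those two rates in everyday terms, the short Python sketch below converts them into commutes. It reuses only figures quoted in this article (36,000 miles per serious mistake, 300 miles per glitch, a 45-mile commute, and a five-day, 50-week working year). It also makes one simplifying assumption that is not from Google: that Chauffeur drives the entire commute, which overstates its share because Levandowski handles the surface streets himself.

```python
# Figures quoted in the article.
MILES_PER_INTERVENTION = 36_000   # serious mistake needing driver intervention
MILES_PER_GLITCH = 300            # minor diagnostic glitch
COMMUTE_MILES = 45                # one-way commute length
TRIPS_PER_YEAR = 2 * 5 * 50       # two trips a day, 5 days a week, 50 weeks

def commutes_between_events(miles_per_event: float, commute_miles: float) -> float:
    """Average number of one-way commutes between events.

    Assumes (for illustration only) that the software drives the whole trip.
    """
    return miles_per_event / commute_miles

def years_between_events(miles_per_event: float) -> float:
    """Average years of commuting between events for a single commuter."""
    trips = commutes_between_events(miles_per_event, COMMUTE_MILES)
    return trips / TRIPS_PER_YEAR

if __name__ == "__main__":
    print("commutes per glitch:", commutes_between_events(MILES_PER_GLITCH, COMMUTE_MILES))
    print("commutes per intervention:", commutes_between_events(MILES_PER_INTERVENTION, COMMUTE_MILES))
    print("years per intervention:", years_between_events(MILES_PER_INTERVENTION))
```

Under these assumptions, a single commuter would see a minor glitch roughly every six or seven trips and a serious mistake about once in 800 trips.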

The best way to execute that robot-to-human hand-off remains an open question. How many seconds of warning should Chauffeur provide before giving back the controls? The driver would need a bit of time to gather situational awareness, to put down that coffee or phone, and refocus. “It could be 20 seconds; it could be 10 seconds,” suggests Levandowski. The actual number, he says, will be “based on user studies and facts, as opposed to, ‘We couldn’t get it working and therefore decided to put a one-second [hand-off] time out there.’”

So far, Chauffeur has a clean driving record. There has been only one reported accident that can conceivably be blamed on Google. A self-driving car near Google’s headquarters rear-ended another Prius with enough force to push it forward and impact another two cars, falling-dominoes style. The incident took place two years ago—the Stone Age, in the foreshortened timelines of software development—and, according to Google spokespeople, the car was not in self-driving mode at the time, so the accident wasn’t Chauffeur’s fault. It was due to ordinary human error.

Human drivers get into an accident of one sort or another an average of once every 500,000 miles in the U.S. Accidents that cause injuries are even rarer, occurring about once every 1.3 million miles. And a fatality? Every 90 million miles. Considering that the Google self-driving car program has already clocked half a million miles, the argument could be made that Google Chauffeur is already as safe as the average human driver. It’s not an argument Google makes to the public because Levandowski says the system hasn’t encountered enough challenging situations in its real-world commutes. “We can speculate; we have models, [but] we don’t actually know the value of the technology to society,” he says—“yet.”
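A rough calculation shows why half a million clean miles is suggestive but not conclusive. The Python sketch below is an illustration only, not Google's method: it assumes accidents arrive at a constant average rate per mile (a Poisson model) and uses the human-driver figures quoted above. The function names are made up for this example.

```python
import math

# Human-driver figures quoted in the article (miles per event).
HUMAN_MILES_PER_ACCIDENT = 500_000
HUMAN_MILES_PER_INJURY = 1_300_000
HUMAN_MILES_PER_FATALITY = 90_000_000
GOOGLE_MILES_DRIVEN = 500_000

def prob_zero_events(miles_driven: float, miles_per_event: float) -> float:
    """Chance of a clean record over miles_driven for a driver who averages
    one event per miles_per_event, under the assumed Poisson model."""
    expected_events = miles_driven / miles_per_event
    return math.exp(-expected_events)

def miles_for_confidence(miles_per_event: float, confidence: float) -> float:
    """Miles after which a driver with the given average rate would have had
    at least one event with probability `confidence`."""
    return -miles_per_event * math.log(1.0 - confidence)

if __name__ == "__main__":
    # How often would a merely average human match the clean record by luck?
    print(prob_zero_events(GOOGLE_MILES_DRIVEN, HUMAN_MILES_PER_ACCIDENT))
    # Event-free miles needed to rule out "no better than average" at 95%.
    print(miles_for_confidence(HUMAN_MILES_PER_ACCIDENT, 0.95))
    print(miles_for_confidence(HUMAN_MILES_PER_FATALITY, 0.95))
```

Under this model, an average human driver would go 500,000 miles without an accident a bit more than a third of the time, so a clean record over that distance cannot by itself separate Chauffeur from an ordinary driver. For fatalities, the event-free mileage needed runs to hundreds of millions of miles.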

* * *

Google has been uncommonly secretive about its self-driving-car program. Though it began in 2009, the company first announced the project in a blog post a year later. Detroit was not amused.

The attack came from Chrysler, the smallest of Detroit’s Big Three automakers, in the form of a television commercial for the new Dodge Charger. In the ad, the Charger is traveling through a long gloomy tunnel, the camera tracking with it. A baritone voice speaks: “Hands-free driving, cars that park themselves, an unmanned car driven by a search-engine company.” The voice-over is monotone, lifeless, ominous. “We’ve seen that movie,” the voice intones. “It ends with robots harvesting our bodies for energy.”

Google is still not saying much to reporters (including this one) about its plans, but since it was accused of being the bad guy in a real-life Matrix, the company has made a concerted effort to reach out to potential partners. Google lobbyists have made the rounds with legislators in Washington. Its engineers have made pilgrimages to Detroit and abroad. And its data experts have been talking with some of the big insurance companies. They’re making clear tracks in a big push.

A year after the Dodge commercial aired, Levandowski showed up in Detroit as the keynote speaker at the SAE’s annual shindig. He came to Motor City bearing an olive branch: the fruit of several years of intensive research for them to taste, even take for themselves. Google wants to make “available to the rest of the auto industry all of the building blocks that we ourselves use,” he said and then ticked off the goodies—“the Android operating system, search, voice, social, maps, navigation, even Chauffeur.” Instead of rebuilding a whole operating system from scratch, he said, automakers should focus on making the user experience their own.

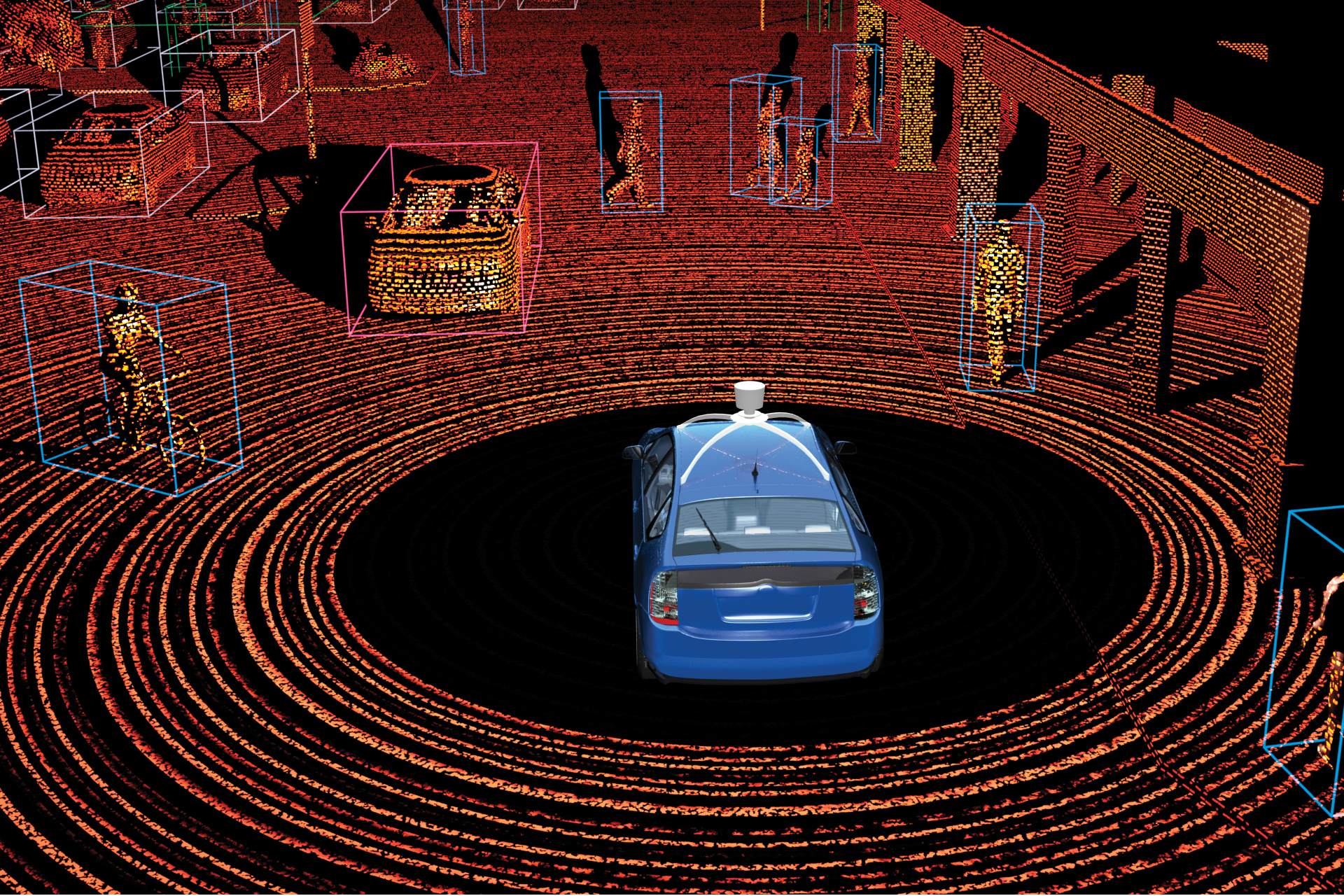

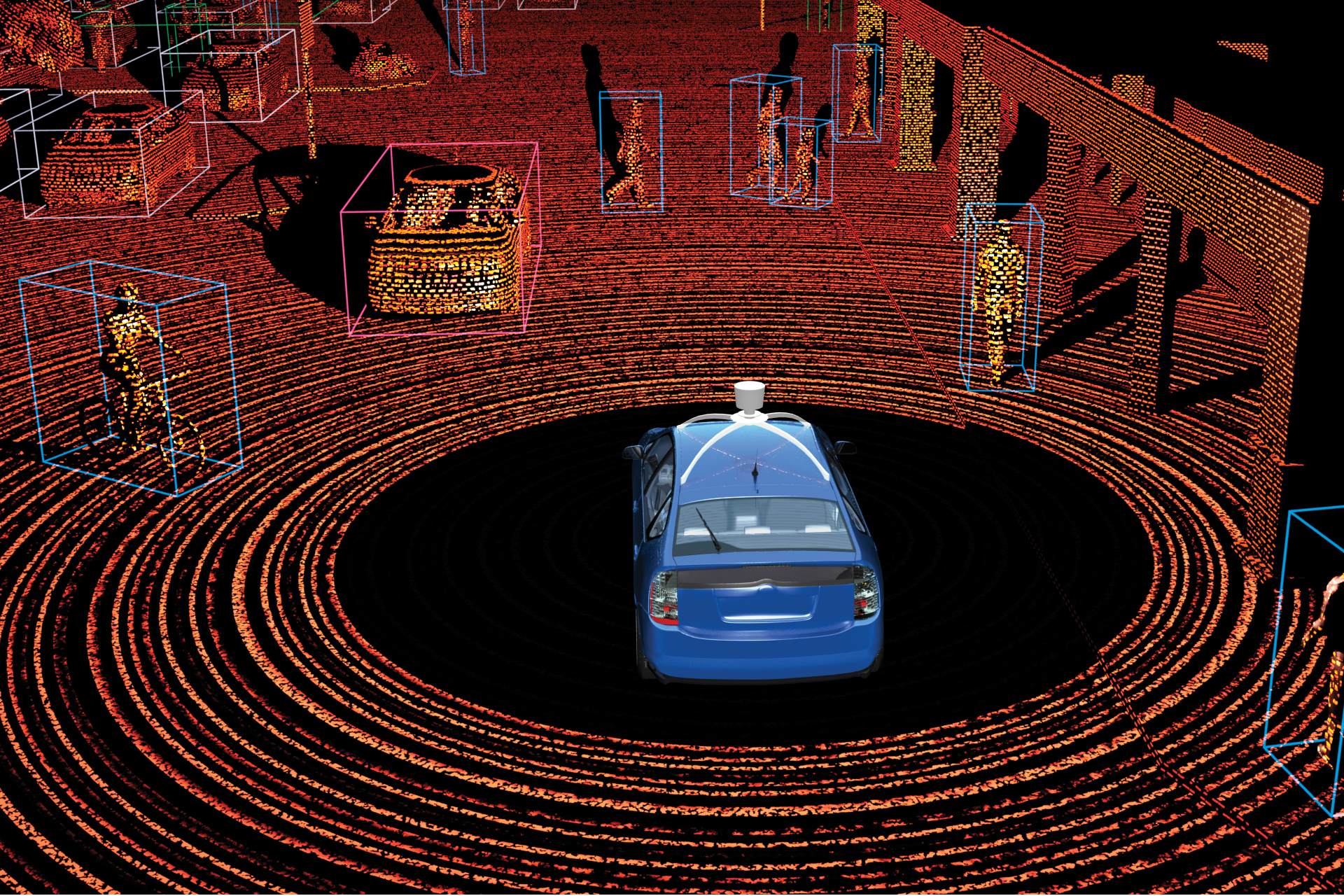

No one talks about the actual terms of the deal—negotiations with individual car companies were held behind closed doors—but it shouldn’t surprise anyone if Google is proposing to give away the software. For the car companies, the real cost of implementing the technology would be in the specialized peripheral that Chauffeur needs to run: the lidar. The acronym stands for light detection and ranging, and it works on the same principle that radar and sonar do—but today’s most advanced lidar is much more accurate, generating up to 1.3 million voxels per second. (A voxel is like a pixel but represents a point in space instead of on a two-dimensional screen.) Group a million or so voxels together and you have a point-cloud: a 3-D model mapped at a 1:1 scale and accurate down to the centimeter. But at $75,000 to $85,000 each, Google’s lidar costs more than every other component in the self-driving car combined, including the car itself.

A grizzled maverick of an engineer named David Hall designed the lidar that Google uses. His company, Velodyne, makes a unit that packs 64 lasers in a turret that typically rotates at 600 rpm, continuously strafing the landscape with 64 separate beams. “For the autonomous vehicle, I’m kind of the only thing that works,” Hall says.
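The lidar figures quoted here can be combined into a back-of-the-envelope estimate of how finely the turret samples its surroundings. The Python sketch below assumes that the peak rate of 1.3 million voxels per second applies to the 64-laser unit spinning at 600 rpm, and that points are spread evenly across the lasers. Both are simplifications for illustration, not Velodyne specifications.

```python
# Figures quoted in the article.
POINTS_PER_SECOND = 1_300_000   # "up to 1.3 million voxels per second"
LASER_COUNT = 64                # beams in the turret
ROTATION_RPM = 600              # typical rotation speed

def points_per_revolution(points_per_second: float, rpm: float) -> float:
    """Points captured in one full 360-degree sweep of the turret."""
    revolutions_per_second = rpm / 60
    return points_per_second / revolutions_per_second

def horizontal_step_degrees(points_per_second: float, rpm: float, laser_count: int) -> float:
    """Approximate angle between successive samples from one laser,
    assuming points are divided evenly among the lasers."""
    per_laser = points_per_revolution(points_per_second, rpm) / laser_count
    return 360 / per_laser

if __name__ == "__main__":
    print("points per sweep:", points_per_revolution(POINTS_PER_SECOND, ROTATION_RPM))
    print("degrees between samples:",
          horizontal_step_degrees(POINTS_PER_SECOND, ROTATION_RPM, LASER_COUNT))
```

Under these assumptions each sweep yields about 130,000 points, so the "million or so" voxels of a point-cloud accumulate in well under a second of spinning.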

Industry scuttlebutt has it that Ford is giving Google the most serious consideration. Hall confirms that a major automaker recently summoned him to its headquarters to ask whether he could make a next-generation lidar—a ruggedized, standardized automotive component. The company wanted a design that it could hide (perhaps behind the windshield) that would wholesale for no more than $1,000, and it wanted a prototype immediately. If it liked what it saw, it promised to buy a thousand units—in four years’ time.

Hall rebuffed the offer. “If you look at this from a venture capital point of view,” he says, “that’s just about the stupidest idea anyone’s ever come up with.” Hall is confident that with enough time and resources, he could engineer a $1,000 lidar unit, but why bother? It would be many more years before a self-driving car is brought to market, prompting lidar orders in the hundreds of thousands. The return on the engineering investment might be negative for decades to come.

It’s a catch-22, a classic chicken-and-egg problem: Which will come first, the $100-million lidar order from a car company? Or the $100-million lidar factory by Velodyne or another supplier? One hundred million dollars is an arbitrary figure. The point is that some company somewhere needs to make a massive investment. But who?

Google, to its credit, shows no signs that it’s allowing Detroit to slow it down. Even after returning from what must have been discouraging talks with manufacturers, it has not deviated from the script: Self-driving cars should be achievable in five years. It takes more than five years to engineer a new car from the ground up. If Detroit started designing self-driving cars now around components that actually exist, there’s no way the technology could get to the showroom by 2017. Google is not a car manufacturer. Nor does it intend to be one, Levandowski says. So what’s the plan?

“I don’t think we need to wait 10 years for the next model or body style to come out to build the technology,” he told the SAE audience. “I’m looking forward to the aftermarket seeding this and starting the adoption by customers.” In other words, Google thinks a new generation of bot-rodders may kick things off.

Google won’t say anything more, but since there’s really only one place to turn for the all-important lidar, I ask David Hall what he thinks. Hall has considered releasing a bolt-on self-driving system; he’s even priced it out: $100,000. “It would be for people like my dad,” he says. “They’re not driving very well any more, and they could afford it.” However, without reinventing Chauffeur and the super-high-resolution Google maps that go with it, Hall doesn’t see the point. He imagines talking to potential customers. “What would you say,” he asks. “‘Almost as good as Google’s?’”

* * *

The other fight is the legal one. It too is filled with catch-22’s. Hall described a PowerPoint presentation containing the automaker’s analysis of self-driving-car technology. “It was about 20 pages long,” he says, “and the last 10 pages were ‘What’s going to happen when we get sued?’” Detroit doesn’t want to start making self-driving cars without legal clarity. And legal clarity will not arrive until self-driving cars test the law.

Bryant Walker Smith, a civil engineer, lawyer, and Stanford Law School fellow, is the leading expert on how existing law would apply to self-driving cars. His book-length legal analysis has more than 650 footnotes, but the title sums up the situation: “Automated Vehicles Are Probably Legal in the United States.” Probably.

In Smith’s analysis, the legal concept of “driver” goes back to an international agreement called the Geneva Convention on Road Traffic, ratified by Congress in 1950. In those days, many of the world’s drivers still had reins and a whip instead of a wheel and pedals. They drove teams of horses, herds of goats, drifts of sheep. Animals, Smith argues, are autonomous. Thus, in the eyes of the law, an autonomous vehicle is arguably similar to a horse-drawn buggy. And under the Geneva Convention, a basic legal requirement for drivers—whether of animals or of cars—is the same. The driver must have control. Who has control of a driverless car?

For the autonomous vehicle that now drives Levandowski to work, the answer (according to Smith) is logical: the person in the driver’s seat. The Google car doesn’t work without one, as Chauffeur needs to be able to hand back the reins with 10, 20, or maybe even 30 seconds’ notice. In Smith’s analysis, the person behind the wheel satisfies the legal requirement of control—but this theory hasn’t been tested in court.

And even if self-driving cars do not violate an international treaty, myriad state laws imply that the driver must be human. New York’s vehicle code, for example, directs that “no person shall operate a motor vehicle without having at least one hand or, in the case of a physically handicapped person, at least one prosthetic device or aid on the steering mechanism at all times when the motor vehicle is in motion.” Computers don’t have hands. That is a problem. Some states, prodded by Google lobbyists and looking to get ahead of the curve, have made the cars explicitly legal. The doctrine assigns driver-hood either to the person in the driver’s seat or to the one who activates the self-driving function. Nevada was the first to adopt the principle into state law: Its DMV even designed special license plates for the vehicles (they have an infinity sign). California, Florida, and, most recently, the District of Columbia have followed suit.

“What’s going to happen, no matter what the law says, is people are going to get sued,” Urmson, the director of Google’s self-driving-car project, allows. But that doesn’t mean the development of potentially lifesaving technology should be halted. “There wasn’t legal protection for the Wright brothers when they made that first plane,” he says. “They made them, they went out there, and society eventually realized its value.”

* * *

The oldest joke in the automotive world is the one about the loose nut between the gas pedal and the steering wheel. But 50 years after Ralph Nader’s Unsafe at Any Speed sparked a revolution in safety engineering, people are finally starting to take the joke seriously. There’s one last hazard to engineer out of the modern car: human error, which, according to NHTSA, is the “certain” cause of 81 percent of all car crashes.

Cars kill roughly 32,000 people a year in the U.S., and in 2010, Levandowski’s life partner, Stefanie Olsen, was one of the 2.2 million per year injured. She was nine months pregnant at the time. “My son’s name is Alex, and Alex almost was never born,” says Levandowski. He credits the safety features engineered into the car—a Prius—for saving Alex’s life. But, he muses, “that crash should have never happened.” Technology, he says, should prevent oblivious drivers from causing harm.

Self-driving-car boosters talk about a virtuous circle that starts when human hands leave the wheel. It’s not just safety that improves. Computer control enables cars to drive behind one another, so they travel as a virtual unit. Volvo has perfected a simple auto-drive system called platooning, in which its cars autonomously follow a professional driver. It uses technology that’s already built into every high-end Volvo sold today, plus a communications system. The vehicle-to-vehicle communications standard soon to be announced by NHTSA would, at least in theory, enable all makes and models to platoon. And lidar could eliminate even the need for a lead driver.

A 2012 IEEE study estimates that widespread adoption of autonomous-driving technology could increase highway capacity fivefold, simply by packing traffic closer together. Peter Stone, an artificial-intelligence expert at the University of Texas at Austin, thinks that intersecting streams of automated traffic will essentially flow through one another, controlled by a new piece of road infrastructure—the computerized intersection manager. Average trip times across a typical city would be dramatically reduced. “And once you have these capabilities,” says Stone, “all kinds of things become possible: dynamic lane reversals, micro-tolling to reduce congestion, autonomous-software agents negotiating the travel route with other agents on a moment-to-moment basis in order to optimize the entire network.” In our self-driving future, not only would traffic jams become a thing of the past, every stoplight would also be green.

In Volvo’s real-world platooning tests, drafting resulted in average fuel savings of 10 to 15 percent—but that, too, is seen as the tip of the iceberg. Wayne Gerdes, the father of “hypermiling,” can nearly double the rated efficiency of cars using fuel-sipping techniques that could be incorporated into auto-driving software. Efficiency could double again as human error is squeezed out of the equation. Volvo’s goal is to eliminate fatalities in models manufactured after 2020, and its newest cars already start driving themselves if they sense imminent danger, either by steering back onto the roadway or braking in anticipation of a crash. Over time, virtual bumpers could gradually replace the rubber-and-steel variety, and automakers could eliminate roll cages, returning the consequent weight savings as even better mileage. The EPA has a new mileage mandate for car manufacturers: They must achieve a fleet-wide average of 54.5 mpg by 2025; autonomous technology could help them get there faster.

NHTSA defines five levels of autonomous-car tech, with level zero being nothing. Level one cars include standard safety features such as ABS brakes, electronic stability control, and adaptive cruise control (ACC). In level two, level-one features like lane centering and ACC tie together and the car begins to drive itself. Level three has the Google-style autopilot. And level four is the holy grail—the car that can drive you home when you’re drunk and then go fetch another six-pack. Already NHTSA has mandated level-one technologies in every new car. Several automakers have systems that approach level two on the test track, and Mercedes appears to be the first to market.

Mercedes offers Distronic Plus with Steering Assist as an option on the 2014 S-class luxury sedans. GM anticipates its Super Cruise system will debut later this decade. Both use a combination of radar and computer vision to center the vehicle in the lane and maintain a safe distance from the car in front of it. But the real engineering challenge is making sure the driver stays alert. “All kinds of problems crop up in real-world testing,” says auto-drive consultant Brad Templeton, who worked with Google on its self-driving-car project for two years. “People start doing all kinds of things they shouldn’t—digging around in the backseat, for example. It freaks everybody out.”

Level-two systems need constant human vigilance and oversight to guard against situations like a deer running into the road; the car must be able to hand back control with no warning. But the temptation for drivers is to simply zone out. So engineers have begun to design countermeasures. Mercedes, for example, requires two hands on the steering wheel at all times. “Everyone’s looking for ways to keep the driver engaged,” says Dan Flores, a spokesman for GM. “As the car gets more and more capable, we want the driver to maintain driving expertise.”

Advocates like to say that there is no technical reason the new Mercedes needs hands on the wheel to steer through a turn. The problem is that even the best radar- and vision-based pedestrian-avoidance systems fail to see the proverbial child running into the road 1 or 2 percent of the time. “Obviously, 99 percent just isn’t good enough; we need 99.99999,” says Templeton. “And what people don’t seem to realize is that the difference between those two numbers is huge. It’s not a one percent difference—it’s an orders of magnitude difference.”
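Templeton's point is easier to see when the two percentages are turned into failure rates. The Python sketch below is purely illustrative: the "one million encounters" figure is a hypothetical yardstick, not data from any automaker, and exact decimal arithmetic is used so that a number like 99.99999 is not blurred by floating-point rounding.

```python
import math
from decimal import Decimal

def failure_rate(success_percent: str) -> Decimal:
    """Fraction of encounters the system misses, given its success percentage."""
    return (Decimal(100) - Decimal(success_percent)) / Decimal(100)

def orders_of_magnitude_apart(worse_percent: str, better_percent: str) -> float:
    """How many powers of ten separate the two failure rates."""
    ratio = failure_rate(worse_percent) / failure_rate(better_percent)
    return math.log10(float(ratio))

def misses_per_million(success_percent: str) -> Decimal:
    """Expected misses over a hypothetical one million pedestrian encounters."""
    return failure_rate(success_percent) * 1_000_000

if __name__ == "__main__":
    print("orders of magnitude:", orders_of_magnitude_apart("99", "99.99999"))
    print("misses per million at 99%:", misses_per_million("99"))
    print("misses per million at 99.99999%:", misses_per_million("99.99999"))
```

A 99 percent system misses one encounter in a hundred, while a 99.99999 percent system misses one in ten million: five orders of magnitude apart, even though the two success figures differ by less than one percentage point.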

Google is betting that established car manufacturers, working with low-cost radar and camera components, will never adequately bridge that gap. It’s chosen a different technical path, one that uses lidar to leapfrog level two altogether. It believes its level-three system will make cars safe enough for people to daydream while they’re being driven to work. And it’s not stopping there. NHTSA’s former deputy director, Ron Medford, has just signed on as Google’s director of safety for the self-driving-car project. “Google’s main focus and vision,” says Medford, “is for a level-four vehicle.”

Adam Fisher grew up in Silicon Valley and lives in the Bay Area, where he writes about technology and travel. This article originally appeared in the October 2013 issue of Popular Science.