The current AI boom demands a lot of computing power. Right now, most of that comes from Nvidia’s GPUs, or graphics processing units: the company supplies somewhere around 90 percent of the AI chip market. This week, it moved to extend that dominance by announcing its next-generation GH200 Grace Hopper Superchip platform.

While most consumers are more likely to think of GPUs as a component of a gaming PC or video game console, they have uses far outside the realm of entertainment. They are designed to perform billions of simple calculations in parallel, a feature that allows them not only to render high-definition computer graphics at high frame rates, but also to mine cryptocurrencies, crack passwords, and train and run large language models (LLMs) and other forms of generative AI. Really, the name GPU is pretty out of date: they are now incredibly powerful multi-purpose parallel processors.

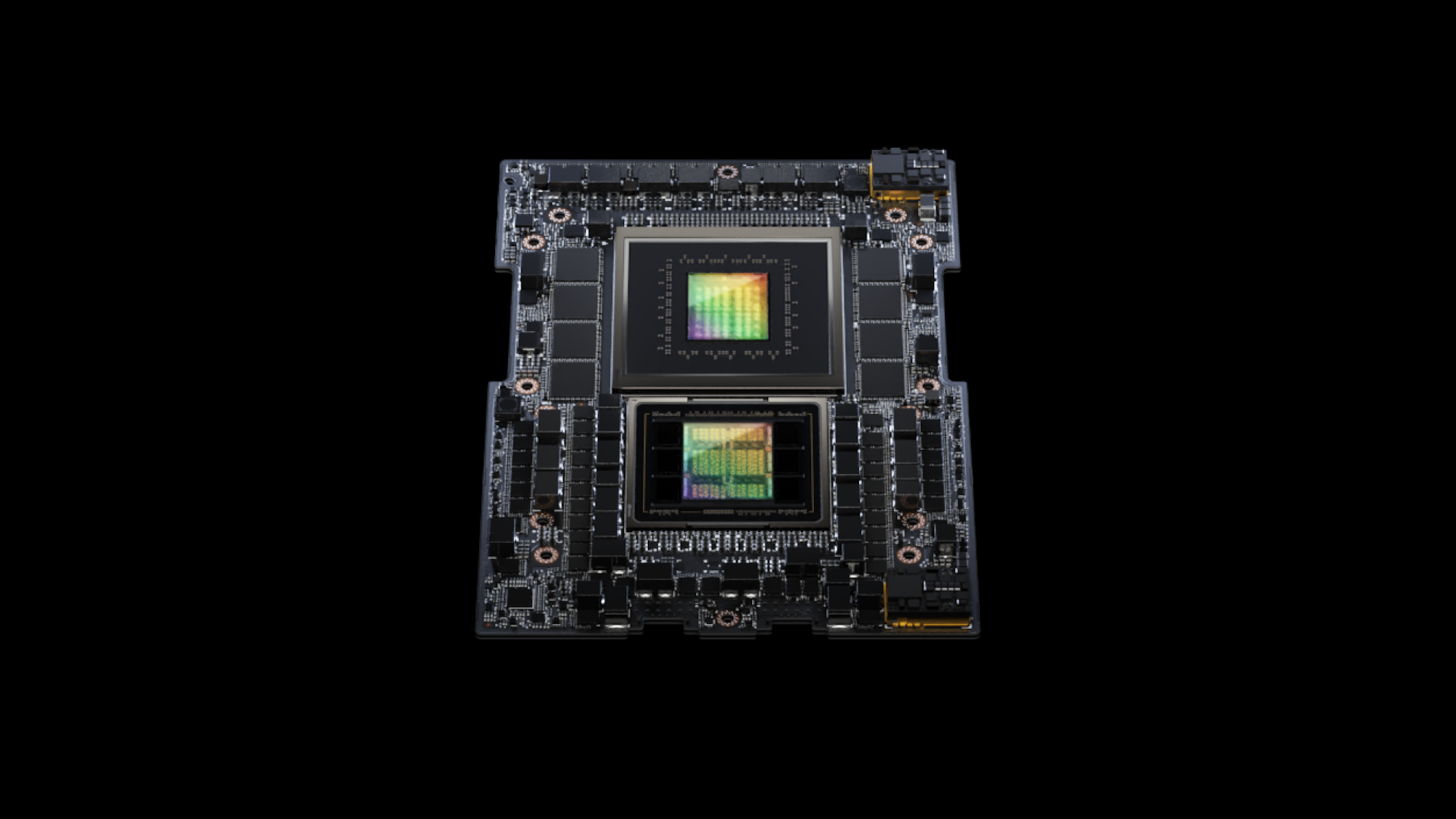

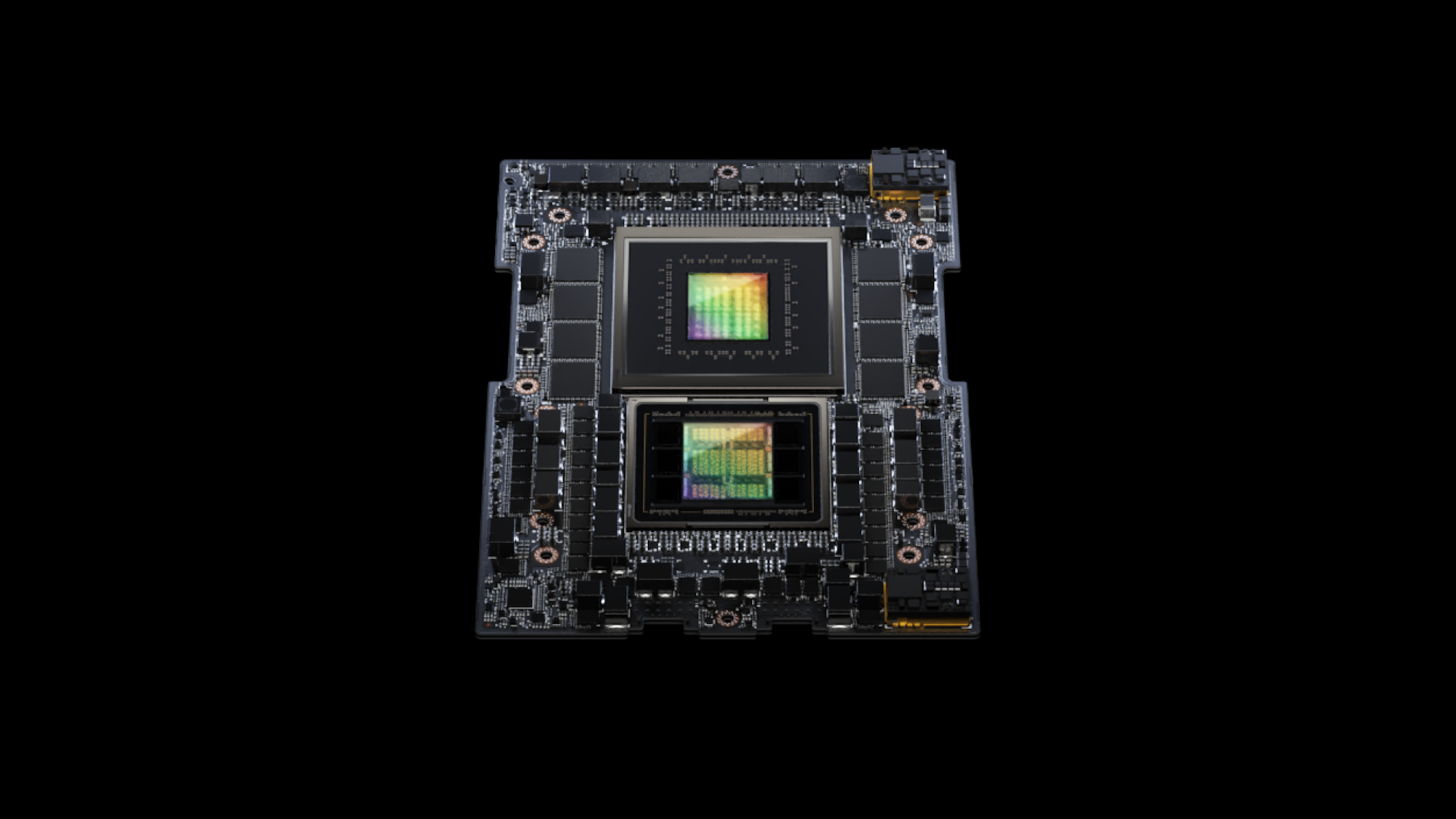

Nvidia announced its next-generation GH200 Grace Hopper Superchip platform this week at SIGGRAPH, a computer graphics conference. The chips, the company explained in a press release, were “created to handle the world’s most complex generative AI workloads, spanning large language models, recommender systems and vector databases.” In other words, they’re designed to do the billions of tiny calculations that these AI systems require as quickly and efficiently as possible.

The GH200 is a successor to the H100, Nvidia’s most powerful (and incredibly in-demand) current-generation AI-specific chip. The GH200 will use the same GPU but have 141 GB of memory, compared to the 80 GB available on the H100. The GH200 will also be available in a few other configurations, including a dual configuration that combines two GH200s and will provide “3.5x more memory capacity and 3x more bandwidth than the current generation offering.”


The GH200 is designed for use in data centers, like those operated by Amazon Web Services and Microsoft Azure. “To meet surging demand for generative AI, data centers require accelerated computing platforms with specialized needs,” said Jensen Huang, founder and CEO of Nvidia, in the press release. “The new GH200 Grace Hopper Superchip platform delivers this with exceptional memory technology and bandwidth to improve throughput, the ability to connect GPUs to aggregate performance without compromise, and a server design that can be easily deployed across the entire data center.”

Chips like the GH200 are important for both training and running (or “inferencing”) AI models. When AI developers are creating a new LLM or other AI model, dozens or hundreds of GPUs are used to crunch through the massive amount of training data. Then, once the model is ready, more GPUs are required to run it. The additional memory capacity will allow each GH200 to run larger AI models without needing to split the computing workload up over several different GPUs. Still, for “giant models,” multiple GH200s can be combined with Nvidia NVLink.
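To make the memory point concrete, here is a rough back-of-the-envelope sketch in Python. It is only an illustration under loud assumptions: the 70-billion-parameter model is hypothetical, each parameter is assumed to take 2 bytes (16-bit precision), a gigabyte is treated as 10^9 bytes, and everything other than the model's weights (such as working memory used while the model runs) is ignored. Only the 80 GB and 141 GB figures come from the article; the function names are made up for this example.

```python
GB = 10**9  # assumption: decimal gigabytes


def weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Approximate memory needed just to hold a model's weights.

    Assumes 2 bytes per parameter (16-bit precision) unless told otherwise.
    """
    return num_params * bytes_per_param


def gpus_needed(num_params: int, gpu_memory_gb: int, bytes_per_param: int = 2) -> int:
    """Minimum number of GPUs whose combined memory can hold the weights.

    This ignores every other use of GPU memory, so real deployments
    would need more headroom than this estimate suggests.
    """
    total = weight_bytes(num_params, bytes_per_param)
    capacity = gpu_memory_gb * GB
    return -(-total // capacity)  # integer ceiling division


if __name__ == "__main__":
    hypothetical_params = 70_000_000_000  # hypothetical 70B-parameter model
    for name, memory_gb in (("H100", 80), ("GH200", 141)):
        count = gpus_needed(hypothetical_params, memory_gb)
        print(f"{name} ({memory_gb} GB): at least {count} GPU(s) for the weights alone")
```

Under these assumptions, the hypothetical model's weights take about 140 GB, which squeezes onto a single 141 GB chip but has to be split across two 80 GB chips. Real-world numbers will differ, since running a model needs memory for more than its weights.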

Although Nvidia is the dominant player, it isn’t the only manufacturer making AI chips. AMD recently announced the MI300X chip with 192 GB of memory, which will go head to head with the GH200, but it remains to be seen if it will be able to take a significant share of the market. There are also a number of startups making AI chips, like SambaNova, Graphcore, and Tenstorrent. Tech giants such as Google and Amazon have developed their own chips, but they all likewise trail Nvidia in the market.

Nvidia expects systems built using its GH200 chip to be available in Q2 of next year. It hasn’t yet said how much the chips will cost, but given that H100s can sell for more than $40,000, it’s unlikely that they will be used in many gaming PCs.