BENEATH A CLEAR SKY and a high sun, a regular human eye can see nearly the entire visible color spectrum. Remove direct sunlight, and a reflection offers only a sliver of the rainbow. But despite darkness distorting our points of reference, we can still determine color in shadow. Many factors influence the hues we detect: our eyes, our brains, the air, objects the light bounces from, Earth’s geometry, and even our visual memories.

Trying to replicate that breadth and sensitivity to color on a computer monitor or printer is both a nightmare and a dream for technologists. And that’s exactly the problem that Roxana Bujack, a staff scientist on the Data Science at Scale Team at Los Alamos National Laboratory in New Mexico, is trying to solve with computations. Math is behind “everything that happens in Photoshop,” Bujack says. “It’s all just matrices and operations, but you see immediately with your eyes what this math does.”

Any answer to this problem would be a far cry from art-class color wheels, or even how most computer screens and printers operate today. Digital work relies on the additive RGB (red, green, blue) model, in which a monitor’s light source adjusts the brightness of those three colors to create the color of each pixel. The CMY (cyan, magenta, yellow) model behind printers, meanwhile, is subtractive, removing colors from a white base; if you want to print yellow on card stock, the printer combines the CMY inks to change the lighter background by varying degrees to reach the desired color.
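For readers who like to see the arithmetic, the relationship between the two models can be sketched in a few lines of Python. This is an idealized textbook conversion, not how any particular printer or monitor works: it assumes 8-bit RGB values, ink amounts expressed as fractions from 0 to 1, and perfect inks on perfectly white paper. The function names `rgb_to_cmy` and `cmy_to_rgb` are made up for this illustration.

```python
def rgb_to_cmy(r, g, b):
    """Idealized conversion: each ink removes its complementary light from white.

    Takes 8-bit RGB values (0-255) and returns ink fractions (0.0-1.0).
    Real printers also rely on black ink and device color profiles.
    """
    return tuple(1 - channel / 255 for channel in (r, g, b))


def cmy_to_rgb(c, m, y):
    """Inverse of the idealized conversion above."""
    return tuple(round(255 * (1 - ink)) for ink in (c, m, y))


# White on screen is all three lights at full brightness;
# on paper it is no ink at all.
white = rgb_to_cmy(255, 255, 255)

# An orange pixel: full red, half green, no blue.
orange = rgb_to_cmy(255, 128, 0)

print("white paper:", white)
print("orange inks (cyan, magenta, yellow):", orange)
print("back to RGB:", cmy_to_rgb(*orange))
```

In this simplified picture, the screen starts from black and adds light, while the page starts from white and takes light away.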

These color models were last updated a century ago. Erwin Schrödinger, of quantum cat fame, along with mathematician Bernhard Riemann and physicist Hermann von Helmholtz, improved RGB. Realizing that the distance between, say, a rosy red and a drab green could not be measured on a straight line, they looked for a more flexible model. They shifted from representations of color in a familiar physical space, what’s known as Euclidean geometry, to the warped world of Riemannian geometry.

Bujack likens their interpretation to an airline service map. Routes aren’t indicated with straight lines, but rather half-moons that reflect Earth’s curvature. “Suppose you take two colors and then pick one that lies on the shortest path between them, say, magenta in the middle, purple to the right, and pink to the left. Then you measure the paths from magenta to purple and from magenta to pink,” she says. “The sum of those two path segments should equal the length of the whole path drawn from [purple] to pink, representing the perceived difference between those two colors. It should add up, just like the flight distances from Seattle through Reykjavik to Amsterdam.”
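The flight-map analogy can be checked numerically. The sketch below is an illustration only: it treats Earth as a perfect sphere with a 6,371-kilometer radius, uses approximate city coordinates, and relies on helper names (`to_unit_vector`, `distance_km`, `point_along`) invented for this example. It confirms that a stop lying exactly on the shortest route splits the trip into two legs that add up to the whole, while a stop slightly off that route makes the trip a little longer.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumption: Earth as a perfect sphere


def to_unit_vector(lat_deg, lon_deg):
    """Turn latitude and longitude in degrees into a 3D unit vector."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))


def central_angle(u, v):
    """Angle in radians between two unit vectors, stable for small angles."""
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    dot = sum(a * b for a, b in zip(u, v))
    return math.atan2(math.sqrt(sum(c * c for c in cross)), dot)


def distance_km(u, v):
    """Shortest (great-circle) distance along the sphere's surface."""
    return EARTH_RADIUS_KM * central_angle(u, v)


def point_along(u, v, t):
    """Point a fraction t of the way along the shortest path from u to v."""
    omega = central_angle(u, v)
    a = math.sin((1 - t) * omega) / math.sin(omega)
    b = math.sin(t * omega) / math.sin(omega)
    return tuple(a * x + b * y for x, y in zip(u, v))


# Approximate coordinates, for illustration only.
seattle = to_unit_vector(47.61, -122.33)
reykjavik = to_unit_vector(64.15, -21.94)
amsterdam = to_unit_vector(52.37, 4.90)

direct = distance_km(seattle, amsterdam)

# A stop exactly on the shortest path: the two legs sum to the whole.
halfway = point_along(seattle, amsterdam, 0.5)
on_path = distance_km(seattle, halfway) + distance_km(halfway, amsterdam)

# A stop near, but not exactly on, the shortest path adds a small detour.
via_reykjavik = (distance_km(seattle, reykjavik)
                 + distance_km(reykjavik, amsterdam))

print(f"direct:         {direct:.1f} km")
print(f"via midpoint:   {on_path:.1f} km")
print(f"via Reykjavik:  {via_reykjavik:.1f} km")
```

That "legs add up to the whole" property is what a Riemannian model of color assumes about perceived differences along the shortest path between two colors.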

Schrödinger’s 3D model has been the foundation of color theory for more than 100 years. Scientists and developers apply it when seeking to perfect the digital representation of colors on screens. It helps translate into pixels the ways a human eye distinguishes different shades, like the way you’re able to recognize this text as black and the background as white without a blur.

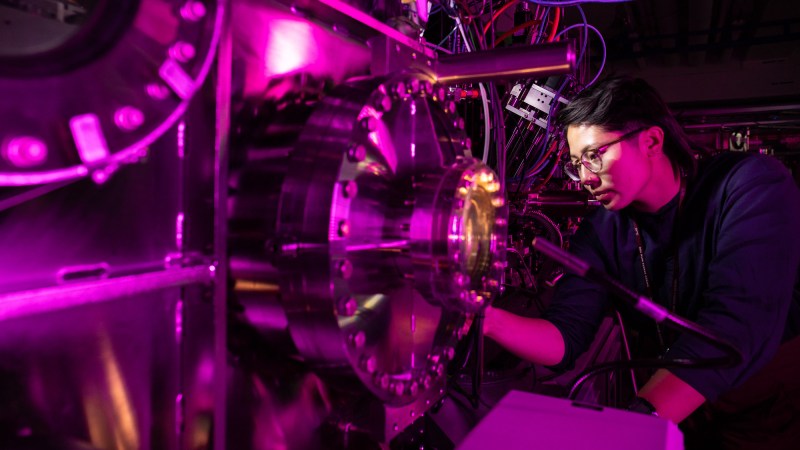

For Bujack, the contours of this space are familiar. She studied mathematics at Leipzig University in Germany, where a course on image processing propelled her into a subset of that field. That’s where she became fascinated by the math that powers programs as diverse as Photoshop and processor-intensive video games. She graduated with a doctorate in computer science in 2014 before landing at Los Alamos National Laboratory, former home to the Manhattan Project.

There, in 2021, her team launched a project with a modest aim: to build algorithms that would design color maps, streamlining the conversion of pigments into digits and data, Bujack says. Illustrators who use Photoshop, Final Cut, and similar programs would benefit; so would the climate scientists, physicists, and weather researchers who represent numerical data with colors.

But they discovered an inconsistency that upended the century-old understanding of the field. “Schrödinger’s work was super-advanced, realizing we need a curved space to describe color space and that this stupid Euclidean space is not working out,” says Bujack. But Schrödinger and his collaborators “did not notice that we need a more robust model.”

Schrödinger’s math doesn’t work, Bujack and her team found, because it fails to predict the correct hues between two colors. On a flight path—halfway between Seattle and Reykjavik, for example—you can calculate how long you have left in your journey. But a midpoint between purple and red does not produce the expected color. The old 3D approach overestimated how different we perceive one shade to be from the next. The Los Alamos team published its findings in April 2022 in the journal Proceedings of the National Academy of Sciences. “As a scientist, I have always dreamed of proving someone famous wrong,” says Bujack. “However, this level of fame exceeds even my wildest dreams.”

But that revelation did not come with an obvious solution. “The current model is not accurate,” says Bujack. “[But] that doesn’t mean we have an off-the-shelf model to replace it.” Because mapping out the new space is “way more laborious” than Schrödinger’s calculations, a mathematical update is “years and years and years in the future.”

The consequences of this discovery, however, could make their way to our computers sooner. Nick Spiker, a color engineer working on IDT Maker, a proprietary digital relighting system, consulted with Bujack after her study was published. He’s since submitted a patent for a product that could help video producers and photographers change the apparent time of day in their videos and pictures.

While it hasn’t led to a replacement model yet, Bujack’s insight will help build something better. That matters, for instance, “if you’re watching Netflix or any visual content and you want accurate color,” Spiker says. He adds, “Now this is going to make images appear more realistic than ever before.”
