Those music files — be they MP3, AAC or WMA — that you listen to on your portable music players are pretty crap when it comes to accurate sound reproduction from the original recording. But just how crap they really are wasn’t known until now.

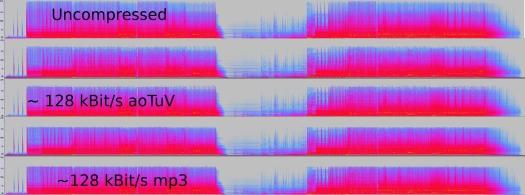

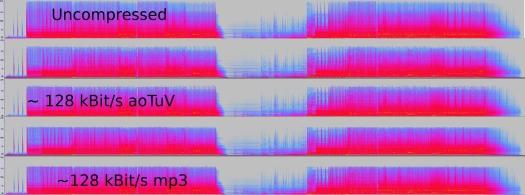

Audio data compression, at its heart, is pretty simple. A piece of software compresses a piece of digital audio data by chopping out redundancy and approximating the audio signal over a discrete period of time. The larger the sample time-period, the less precise the approximation. This is why an MP3 with a high sampling rate (short sample times) is of higher quality than an MP3 with a low sampling rate.
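To make the "longer sample period, coarser approximation" idea concrete, here is a toy sketch. It is not how MP3 or any real codec works (those rely on transform coding and psychoacoustic models); it simply replaces each run of samples with its average and measures the error. The 440 Hz tone, the 44.1 kHz rate and the helper name `block_average_error` are all assumptions made up for this illustration.

```python
import math


def block_average_error(signal, block):
    """RMS error when each run of `block` samples is replaced by its mean."""
    squared_error = 0.0
    for start in range(0, len(signal), block):
        chunk = signal[start:start + block]
        mean = sum(chunk) / len(chunk)
        squared_error += sum((x - mean) ** 2 for x in chunk)
    return math.sqrt(squared_error / len(signal))


# One second of a 440 Hz sine sampled at 44.1 kHz (illustrative values only).
RATE = 44_100
tone = [math.sin(2 * math.pi * 440 * n / RATE) for n in range(RATE)]

# Longer averaging blocks stand in for longer "sample time-periods".
errors = {block: block_average_error(tone, block) for block in (1, 4, 16, 64)}
for block, rms in errors.items():
    print(f"block of {block:2d} samples -> RMS error {rms:.4f}")
```

A block of one sample reproduces the tone exactly, and the error grows as the blocks get longer, which is the trade-off the paragraph above describes.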

To test whether human hearing respects certain theoretical limits that audio compression algorithms take for granted, physicists Jacob N. Oppenheim and Marcelo O. Magnasco at Rockefeller University in New York City played tones to test subjects. The researchers wanted to see whether the subjects could tell apart the timing of the tones and any frequency differences between them.

The fundamental basis of the research is that almost all audio compression algorithms, such as the MP3 codec, extrapolate the signal based on a linear prediction model, which was developed long before scientists understood the finer details of how the human auditory system works. This linear model holds that the timing of a sound and the frequency of that sound have specific cut-off limits: at some point, two tones are so close together in frequency or in time that a person should not be able to hear a difference. Further, time and frequency are linked, so that higher precision on one axis (say, time) means a corresponding loss of precision on the other. If human hearing follows linear rules, we shouldn’t hear any degradation in quality between a high-quality file and the original recording (given a high enough bit rate; we’re not talking about some horrible 192 kbps rip).
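The time-versus-frequency trade-off can be checked numerically. The sketch below assumes that the limit in question is the Fourier (Gabor) uncertainty relation, under which the spread in time multiplied by the spread in frequency cannot fall below 1/(4π); the article itself does not name the limit, so treat that as my reading. A Gaussian pulse sits right at that bound. The function names, the pulse widths and the grid size are illustrative choices, and the transform is a plain, slow DFT to keep the example dependency-free.

```python
import cmath
import math


def spread(xs, weights):
    """Standard deviation of xs under non-negative weights."""
    total = sum(weights)
    mean = sum(x * w for x, w in zip(xs, weights)) / total
    var = sum((x - mean) ** 2 * w for x, w in zip(xs, weights)) / total
    return math.sqrt(var)


def time_frequency_product(sigma_t, n=256, span=1.0):
    """Spread in time times spread in frequency for a Gaussian pulse.

    sigma_t is the standard deviation (in seconds) of the pulse's energy
    envelope; span is the length of the sampled window in seconds.
    """
    dt = span / n
    times = [(k - n // 2) * dt for k in range(n)]
    pulse = [math.exp(-t * t / (4 * sigma_t ** 2)) for t in times]
    freqs = [(m - n // 2) / span for m in range(n)]
    # Direct discrete Fourier transform, evaluated on a centered frequency grid.
    spectrum = [
        sum(p * cmath.exp(-2j * math.pi * f * t) for p, t in zip(pulse, times))
        for f in freqs
    ]
    energy_t = [p * p for p in pulse]
    energy_f = [abs(s) ** 2 for s in spectrum]
    return spread(times, energy_t) * spread(freqs, energy_f)


GABOR_LIMIT = 1 / (4 * math.pi)  # about 0.0796

for sigma_t in (0.02, 0.01):
    product = time_frequency_product(sigma_t)
    print(f"sigma_t = {sigma_t:.2f} s -> product {product:.4f} (limit {GABOR_LIMIT:.4f})")
```

Halving the pulse's duration doubles its spread in frequency, so the product stays pinned near 1/(4π). Under this reading, a listener who beats the limit by a factor of 13 is resolving time and frequency together more finely than any such linear analysis allows.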

The experiment was broken up into five tasks that involved subjects listening to a reference tone coupled with a tone that varied from the reference. The tasks tested the following:

1) frequency differences only

2) timing differences only

3) frequency differences with a distracting note

4) timing differences with a distracting note

5) simultaneously determining both frequency and timing differences

I don’t think it will come as a surprise to a lot of audiophiles, but human hearing most certainly does not have a linear response curve. In fact, during Task 5, which was considered the most complex of the tasks, many of the test subjects could hear differences between tones with up to 13 times more acuity than the linear model predicts. Those who had the most skill at differentiating time and frequency differences between tones were musicians. One, an electronic musician, could differentiate between tones sounded about three milliseconds apart, which is remarkable because a single period of the tone lasts only 2.27 milliseconds. The same subject didn’t perform as well as others in frequency differentiation. Another professional musician was exceptional at frequency differentiation and good at temporal differentiation of the tones.
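For a sense of scale, a period of 2.27 milliseconds corresponds to a tone of roughly 440 Hz. That frequency is my inference from the quoted period, not a figure stated above. A quick back-of-the-envelope check, with `period_ms` as a made-up helper name:

```python
def period_ms(frequency_hz):
    """Length of one cycle of a tone, in milliseconds."""
    return 1000.0 / frequency_hz


# 440 Hz is inferred from the 2.27 ms period quoted above (an assumption).
TONE_HZ = 440.0
GAP_MS = 3.0  # approximate gap the electronic musician could resolve

one_period = period_ms(TONE_HZ)
periods_in_gap = GAP_MS / one_period

print(f"one period of a {TONE_HZ:.0f} Hz tone: {one_period:.2f} ms")
print(f"a {GAP_MS:.0f} ms gap spans {periods_in_gap:.2f} periods")
```

In other words, the gap that subject could resolve was only a little over one full cycle of the tone.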

Even more interesting, the researchers found that composers and conductors had the best overall performance on Task 5, because their work requires them to discern the frequency and timing of many simultaneous notes across an entire symphony orchestra. Finally, the researchers found that temporal acuity (discerning time differences between notes) was much better developed than frequency acuity in most of the test subjects.

So, what does this all mean? The authors plainly state that audio engineers should rethink how they approach audio compression — and possibly jettison the linear models they use to achieve that compression altogether. They also suggest that revisiting audio processing algorithms will improve speech recognition software and could have applications in sonar research or radio astronomy. That’s awesome, and all. But I can’t say I look forward to re-ripping my entire music collection once those codecs become available.