Inside The Largest Simulation Of The Universe Ever Created

A giant supercomputer is making massively detailed models of the cosmos.

Imagine being asked to solve a complex algebra problem that is roughly 95 percent variables and only five percent known values. This is a rough analogy perhaps, but it paints a fairly accurate picture of the task faced by modern cosmologists. The prevailing line of thinking says that the universe is mostly composed of dark matter and dark energy, two mysterious entities that have never been directly observed or measured even though the cosmological math insist that they are real. We can see their perceived effects, but we can’t see them directly–and thus we can’t seen the real structure of our own universe.

And so we make models. Sometime next month, the world’s third-fastest supercomputer –known as Mira–will complete tests of its new upgraded software and begin running the largest cosmological simulations ever performed at Argonne National Laboratory. These simulations are massive, taking in huge amounts of data from the latest generation of high-fidelity sky surveys and crunching it into models of the universe that are larger, higher-resolution, and more statistically accurate than any that have come before. When it’s done, scientists should have some amazing high-quality visualizations of the so-called “cosmic web” that connects the universe as we understand it. And they’ll have the best statistical models of the cosmos that cosmologists have ever seen.

THAT’S COOL, BUT WHY DO WE EVEN NEED TO DO THIS?

Mostly, we do this so we can turn the latest batch of sky survey data into something meaningful. Scientists hope these models will answer some pressing questions about dark matter, dark energy, and the overall structure of the cosmos. Particularly vexing are questions about dark energy, which is purportedly driving the accelerating expansion of the universe and–actually, that’s pretty much all we know about it.

“‘Dark energy’ is just a technical shorthand for saying ‘we have no idea what’s going on.'””Dark energy is confusing because the universe isn’t just expanding–we knew that already–but that expansion is also accelerating, which is very unexpected,” says Salman Habib, a physicist at Argonne National Laboratory and the principal investigator for the Multi-Petaflop Sky Simulation at Argonne. “The cause of this acceleration is what people call ‘dark energy,’ but that’s just a technical shorthand for saying ‘we have no idea what’s going on.'”

These simulations are aimed at shedding some light on this expansion happening all around us. But just as importantly, they are aimed at defining just exactly what is not going on. There are a lot of theories about dark energy; it could be a new kind of field in the universe that we’ve yet to discover, or a characteristic of gravity at large scales that we don’t yet understand. It could be some twist on general relativity that we haven’t thought of. The latest crop of high resolution sky survey data should allow the Argonne team to model very subtle effects of dark energy on the cosmos, thus allowing for a deeper understanding of the nature of dark energy itself. That in turn should help cosmologists rule out possible explanations–or even entire classes of explanations–as they try to zero in on a more perfect theory for how the universe works.

BUT THE UNIVERSE IS INFINITE. HOW CAN YOU SIMULATE THE ENTIRE UNIVERSE?

We don’t, because we can’t. We can’t really define the limits of the universe for these purposes, and computer models by their nature need a set of constraints. But we can simulate larger and larger chunks of the cosmos thanks to regular leaps in computing power and ever-better sky surveys, and from these increasingly larger and higher-resolution simulations we can extrapolate things about the larger universe. That’s important.

Simulations like the ones being run on Mira have been run before, says Katrin Heitmann, another Argonne National Lab researcher and Habib’s co-investigator on the Mira simulation project, “but we are now getting into a regime where we have to be more and more precise.” The next generation of data coming back from the most sophisticated sky survey instruments astronomers have ever created–like the Dark Energy Camera, for instance, which achieved first light just last month–will contain more data (reflecting billions of observed galaxies) and fewer inherent statistical errors. So while previous simulations like the beautifully articulated Millennium Simulation Project have yielded excellent visualizations and models of the distribution of matter across the cosmos, these new and better data sets require a new and better computing architecture to deal with them.

We can build architectures to deal with these new data sets thanks largely to Moore’s Law. Every three years or so, supercomputing on the whole experiences a roughly ten-fold increase in computing power. That enables supercomputing centers like Argonne to construct machines (or upgrade old ones) that can far outperform what was at the very vanguard of the field just a year or two ago. The 10-petaflop (that’s ten quadrillion calculations per second) Mira system is one such example of this, and it’s Mira that will enable Argonne to run the biggest dark matter simulations ever performed.

WHAT WILL THESE SIMULATIONS LOOK LIKE?

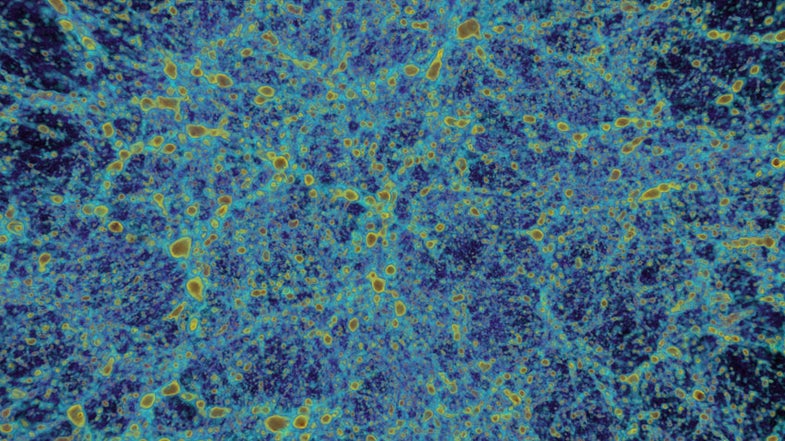

Visually, they’ll look like this:

This visualization comes from some preliminary tests of the new computing architecture, known as HACC (for Hardware/Hybrid Accelerated Cosmology Code–more on this later). And what you are looking at is essentially a 3-D block of the universe and the way matter is distributed throughout it, according to the data gleaned from various sky survey sources.

“What you’re looking at is the cosmic web,” Habib says. “You can see clearly these big voids and these filaments and these clumps. What you’re actually seeing is the matter density. The blobby parts are where the density is highest, and that’s where the galaxies are.” You can’t actually see the galaxies here–this is a representation rather than a true optical model. In the smallest clumps there may be no galaxies at all. In the medium-sized clumps, there could be one or more. The largest clumps represent galaxy clusters where thousands of galaxies might reside.

Simulating Matter Distribution Across The Cosmos (Zoomed)

This is a still frame of the cosmos as we currently see it, but the simulations slated for Mira will build something more like a movie of the universe going back billions of years when the universe was much denser–like a million times denser–than it is now. Astronomers can then watch this movie in extremely high detail to see how the universe developed over time, and thus observe the roles dark matter and especially dark energy had in forming our current universe. And, because it is a computer model, they can then play about with the parameters of this virtual universe to test their theories. Hopefully Mira will prove that some theories still stand up. At the very least, it should prove some theories unlikely.

SO IF EACH SIM IS BIGGER AND BETTER THAN THE LAST ONE, WHAT IS SO SPECIAL ABOUT THIS ONE?

For one, its unprecedented resolution and detail, which we’ve described at length above. But where the future of supercomputer modeling is concerned, the HACC architecture is extremely important as well. HACC was developed from scratch for this project, and it is optimized for Mira. But it was designed so it can be optimized for other supercomputers–and other supercomputer applications–as well, a rarity for a this kind of software.

Why? Every supercomputer is designed a bit differently, and each has its own quirks and idiosyncrasies. Unlike programs written for desktop computers, for instance, software written for supercomputers is generally written for the specific machine it will be used on. It won’t work optimally (or at all) if shifted to a different machine. So each time supercomputers leap forward a generation, an ongoing research project has to write new software for a new machine. “During a decade of code development you may have three different computing architectures come and go,” Habib says. “So that’s the magic of HACC.”

HACC isn’t so much magic as smart design. Its modular construction means part of the underlying software works the same on all machines, so it’s far easier to port it from one machine (or generation of machine) to another. The other piece is a pluggable software module that can be optimized for each particular machine. This drastically reduces the amount of time researchers have to spend waiting on new code development before pushing their research forward. And when a new computer comes online, like Oak Ridge National Labs’ brand-new 20-petaflop Titan, it’s relatively simple for researchers to quickly apply jumps in computing power to their research.

Its customizable, optimizable nature also means HACC can be applied to many research projects rather than just the one for which it was originally designed. And it’s creators want HACC to be hacked. “We’re a small team, so we can’t really exploit all the scientific capabilities of this code ourselves, nor do we necessarily want to,” Habib says of HACC, adding “it’s a kind of code that other teams need to write, not just for cosmology but for other applications.” Habib, Heitmann, and their colleagues see HACC as a community resource–not only in the sense that they plan to share their cosmology results freely with the scientific community, but also in the sense that the software itself can potentially be altered and adapted to any number of modeling applications in other fields.

SO WILL THESE SIMULATIONS SOLVE THE MYSTERY OF THE DARK UNIVERSE?

No. Or at least the possibility is very, very remote. But it will influence some existing theories, send others to the discard heap, and otherwise focus the current line of thinking and future lines of questioning into these mysterious forces. And in the offing, these simulations will help researchers continue to refine the tools they use to leverage the ongoing explosion in supercomputing power into meaningful scientific gains. If Mira solves the mystery of dark energy in the coming months, we’ll be surprised. We’ll be equally surprised if it doesn’t push the field of theoretical cosmology noticeably forward. And if HACC doesn’t help accelerate the pace of supercomputer science at large, we’d be equally stunned.

Correction: An earlier version of this story inaccurately stated that Salman Habib and Katrin Heitmann are researchers at Los Alamos National Laboratory. They are currently employed by Argonne National Laboratory. The story has ben amended to reflect this.