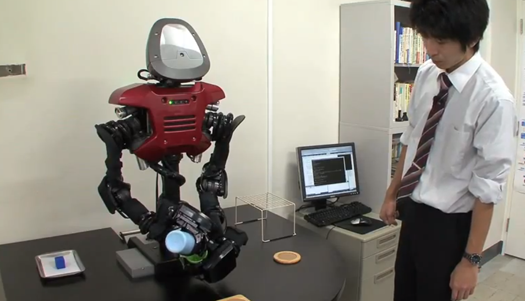

Video: A Robot That Can Figure Out New Tasks Based On the Ones It Knows


Pour a robot a glass of water, and you quench its thirst for a day. But teach a robot to pour a cup of water and you are somewhere on par with researchers at Tokyo Institute of Technology’s Hasegawa Group. Roboticists and software engineers there have implemented a kind of self-replicating neural technology into their robot that enables it not only to perform tasks but also to learn as it goes, integrating prior knowledge into new tasks and environments.

This ability comes from an algorithm called the Self-Organizing Incremental Neural Network (SOINN), which the researchers developed to give their ‘bot more mental dexterity. To borrow an example from the video below, the robot can fill a glass with water from a bottle by following pre-programmed instructions. But if its overseer asks it to chill the water halfway through the task, the robot will actually stop and think about the next steps.

Figuring that it can’t grab an ice cube until it empties one of its two hands, it then reasons that the water bottle is more expendable than the glass of water and sets the bottle down. It then grabs the ice and drops the cube into the glass. Task completed, no extra programming necessary.

All that might seem anticlimactic, but it’s pretty impressive from an AI perspective. The ability to adapt to new situations and learn from non-programmed past experiences is one that robotic systems sorely lack. Coupled with the ability to go online and ask other robots–robots that are also learning on the fly–how to perform a certain task, a global network of such robots could quickly assemble a vast body of know-how from which to draw, making every robot in the network smarter.