At 18 months old, babies have begun to make conscious delineations between sentient beings and inanimate objects. But as robots get more and more advanced, those decisions may become harder to make. What causes a baby to decide a robot is more than bits of metal? As it turns out, it takes more than humanoid looks–babies rely on social interaction to make that call.

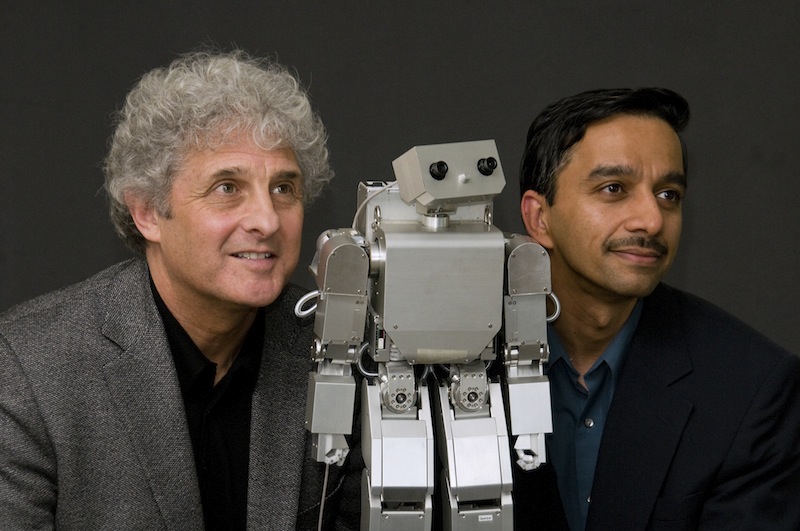

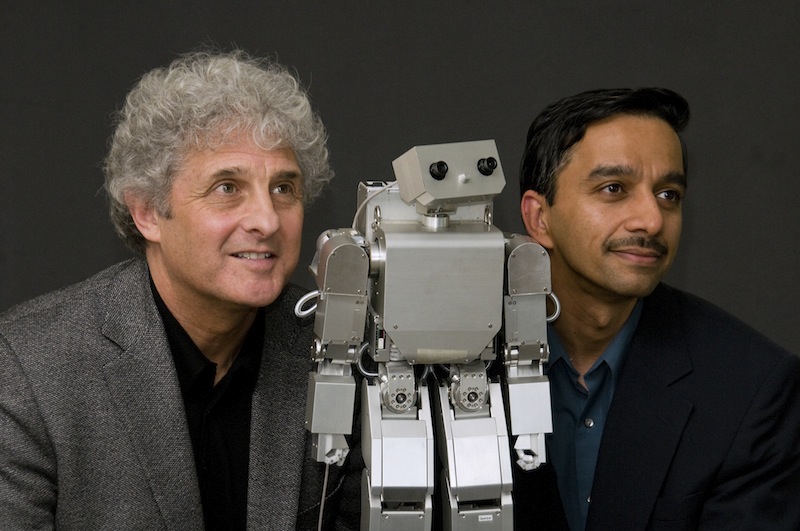

A study at the University of Washington’s Institute for Learning and Brain Sciences took a sample of 64 babies, all 18 months old, who were each tested individually. The experimental test had the babies sit on their parents’ laps, facing a remote-controlled humanoid robot. Sitting next to the robot was Rechele Brooks, one of the researchers on the study. Brooks and the robot (controlled remotely by an unseen researcher) would then engage in a 90-second skit, in which Brooks interacted with the robot as if it were a child, asking questions like “Where is your tummy?” and “Where is your head?” The robot would in turn point to its different parts. The robot would also imitate a few arm movements, like waving back and forth.

The babies who watched this skit looked back and forth between the robot and Brooks as if “at a ping-pong match,” said Brooks. After the skit, Brooks left the room, leaving the baby and the robot alone (well, along with the baby’s parent–this probably isn’t a punching or stabbing robot, but there’s no harm in being extra safe). The robot would then beep and shift slightly to get the baby’s attention, and then turn to look at a nearby toy.

In 13 out of 16 cases, the baby followed the robot’s gaze, suggesting that the baby saw the robot as a sentient being whose focus of attention might be of interest to the baby as well. Babies at that age distinguish between, say, a swivel chair’s movement and a person’s movement, and will only follow the person. In following the robot, the study suggests, the baby has decided to treat the robot as a sentient being rather than an object.

The control experiment was very similar, except that the 90-second skit was omitted. The baby was familiarized with the robot but did not see it interact with a third party. In this situation, the baby followed the robot’s line of sight in only three out of 16 instances, a big enough difference to suggest that social interaction plays a major part in the baby’s decision to treat a robot like a being.
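For readers who want to see just how big that difference is, here is a rough back-of-the-envelope check. The article does not say which statistical test the researchers used, so the one-sided Fisher's exact test below is an assumption chosen for illustration, and the function name `fisher_exact_one_sided` is hypothetical. It asks: if watching the skit made no difference, how often would a split at least as lopsided as 13 of 16 versus 3 of 16 turn up by chance?

```python
from math import comb


def fisher_exact_one_sided(a, b, c, d):
    """One-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Returns the probability, with row and column totals held fixed, of
    seeing `a` or more in the top-left cell if the two groups do not differ.
    """
    row1 = a + b          # babies in the first group
    row2 = c + d          # babies in the second group
    col1 = a + c          # total gaze followers across both groups
    total = comb(row1 + row2, col1)
    upper = min(row1, col1)
    # Sum the hypergeometric probabilities of every table at least as extreme.
    return sum(
        comb(row1, k) * comb(row2, col1 - k) for k in range(a, upper + 1)
    ) / total


# Counts taken from the article: 13 of 16 followed the robot's gaze after
# the skit, 3 of 16 followed it without the skit.
p_value = fisher_exact_one_sided(13, 3, 3, 13)
print(f"one-sided p-value: {p_value:.6f}")
```

Under these assumptions the chance of such a split arising by luck comes out well below one percent, which is consistent with the article's reading that the skit, not coincidence, changed how the babies treated the robot.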

It’s a really interesting idea: What makes a baby decide that something is a being is not necessarily that thing’s visual similarity to a person. Appearance isn’t everything–babies recognize the ability to interact socially as a human trait. From the mouths of babes, sort of.