Lie Like A Lady: The Profoundly Weird, Gender-Specific Roots Of The Turing Test

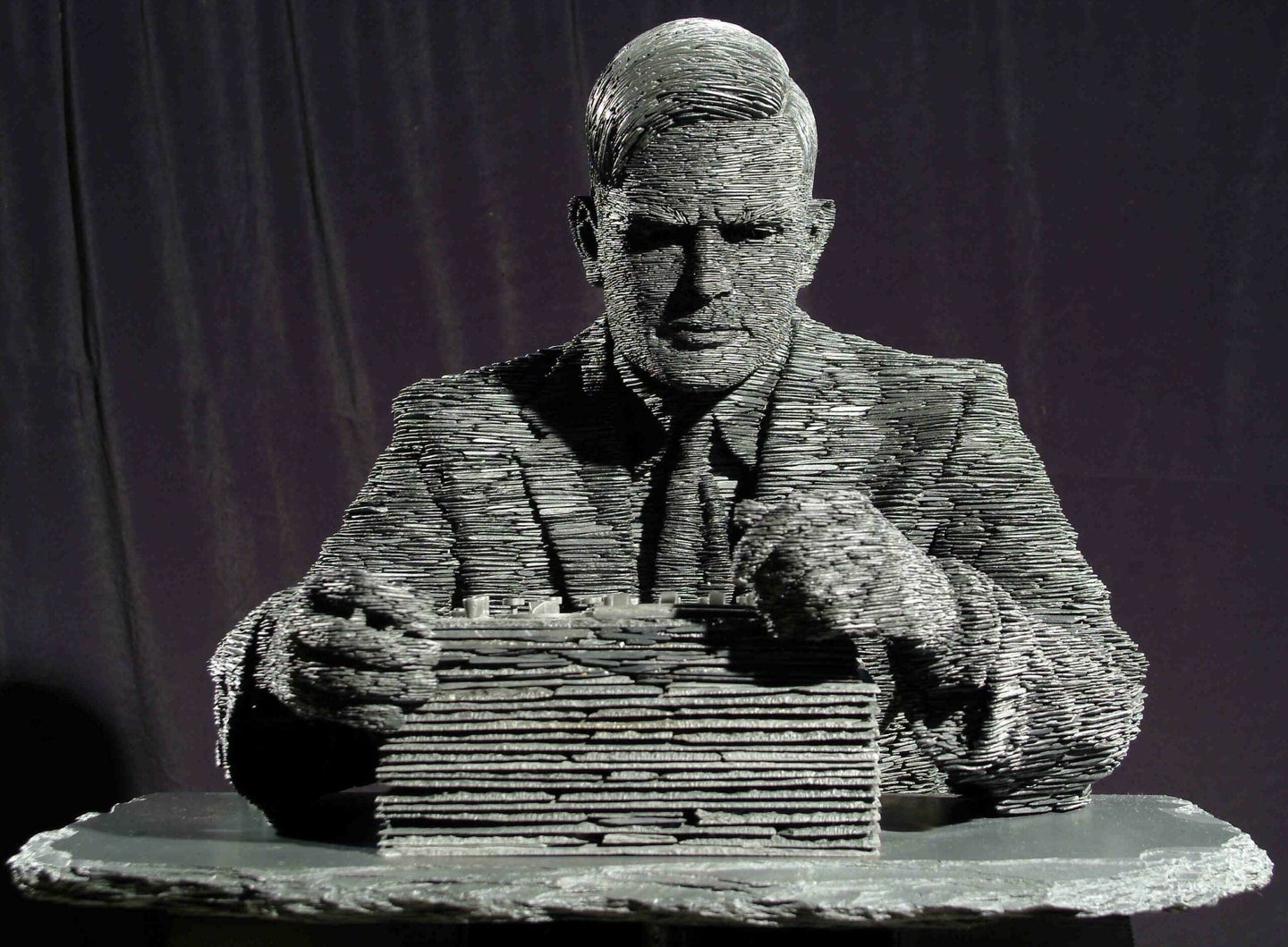

By now, you may have heard that the Turing Test, that hallowed old test of machine intelligence proposed by pioneering mathematician Alan Turing in 1950, has been passed. In a contest held this past weekend, a chatbot posing as a 13-year-old Ukrainian boy fooled a third of its human judges into thinking it was a human. This prompted the University of Reading, which had organized the competition, to announce the achievement of “an historic milestone in artificial intelligence.”

You may have also heard that this was a complete sham, and the academic equivalent of urinating directly on Turing’s grave. Turing imagined a benchmark that would answer the question, “Can machines think?” with a resounding yes, demonstrating some level of human-like cognition. Instead, the researchers who built the winning program, “Eugene Goostman,” engaged in outright trickery. Like every chatbot before it, Eugene evaded questions, rather than processing their content and returning a truly relevant answer. And it used possibly the dirtiest trick of all. In a two-part deception, Eugene’s broken English could be explained away by its not being a native speaker, and its general stupidity could be justified by its being a kid (no offense, 13-year-olds). Instead of passing the Turing Test, the researchers gamed it. They aren’t the first (Cleverbot was considered by some to have passed in 2011), but as of right now, they’re the most famous.

What you may not have heard, though, is how profoundly bizarre Alan Turing’s original proposed test was. Much like the Uncanny Valley, the Turing Test is a seed of an idea that’s been warped and reinterpreted into scientific canon. The University of Reading deserves to be ridiculed for claiming that its zany publicity stunt is a groundbreaking milestone in AI research. But the test it’s desecrating deserves some scrutiny, too.

“Turing never proposed a test in which a computer pretends to be human,” says Karl MacDorman, an associate professor of human-computer interaction at Indiana University. “Turing proposed an imitation game in which a man and a computer compete in pretending to be a woman. In this competition, the computer was pretending to be a 13-year-old boy, not a woman, and it was in a competition against itself, not a man.”

MacDorman isn’t splitting hairs with this analysis. It’s right there in the second paragraph of Turing’s landmark paper, “Computing Machinery and Intelligence,” published in 1950 in the journal Mind. Turing begins by describing a scenario where a man and a woman would both try to convince the remote, unseen interrogator that they are female, using type-written responses or by speaking through an intermediary. The real action, however, comes when the man is replaced by a machine. “Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman?” asks Turing.

The Imitation Game asks a computer to imitate not only a thinking human, but a specific gender of thinking human. It sidesteps the towering hurdles associated with creating human-like machine intelligence, and tumbles face-first into what should be a mathematician’s nightmare: the unbounded, unquantifiable quagmire of gender identity.

The imagined machine would need to understand the specific social mores and stereotypes of the country it’s pretending to hail from. It might also have to decide when its false self was born. This was in 1950, after all, just 22 years after British women were granted universal voting rights. The aftershocks of the women’s suffrage movement were still being felt. So how should a machine pretend to feel about this subject, whether as a woman of a certain age, or as a student born after those culture-realigning battles were won?

Whether a computer can pull this off seems terribly fascinating, and like an excellent research question for some distant era, long after the puzzle of artificial general intelligence is solved. But the Imitation Game is an exercise posed at the inception of the digital age, at a time when the term computer was as likely to conjure up an image of a woman crunching numbers for the Allied war effort as a machine capable of chatting about its hair.

The hair thing is Turing’s example, not mine. More on that later.

By now you might be wondering why I haven’t moved on to the Turing Test, which is surely some clarified, revised version of the Imitation Game that Turing presented in a later publication. Would that it were so. When he died in 1954, Turing hadn’t removed gender from his ground-breaking thought experiment. The Turing Test is an act of collective academic kindness, conferred on its namesake posthumously. And as it entered popular usage, it took on new meaning and significance, as the standard by which future artificial intelligences should be judged. The moment when a computer tricks its human interrogator will be the first true glimpse of machine sentience. Depending on your science fiction intake, it will be cause for celebration, or war.

In that respect, the Turing Test shares something with the Uncanny Valley, a hypothesis that’s also based on a very old paper, which also presented no experimental results, and also guesses at specific aspects of technology that wouldn’t be remotely possible for decades. In this 1970 paper, roboticist Masahiro Mori imagined a curve on a graph, with positive feelings towards robots rising steadily as those machines looked more and more human-like, before suddenly plunging. At that proposed level of human mimicry, subjects would feel unease, if not terror. Finally, the graph’s valley would form when some potential amount of perfect human-aping capability is achieved, and we don’t just like androids—we love them!

The excessive italics are my attempt to highlight the fact that, in 1970, the Uncanny Valley was based on no interactions with actual robots. It was a thought experiment. And it still is, in large part, because we haven’t achieved perfect impostors, and the related academic experiments rely not on robots, but static images and computer-generated avatars. Also, Mori himself never bothered to test his own theory, in the 44 years since he wistfully dreamt it up. (If that seems overly harsh, read the paper, co-translated by Karl MacDorman. It’s alarmingly short and florid.) Instead, he eventually wrote a book about how robots are born Buddhists. (Again, don’t take my word for it.)

But despite the flimsy, evidence-free nature of Mori’s paper, and the fact that face-to-face interactions with robots have yielded a variety of results, too complex to conform to any single curve, the Uncanny Valley is still treated by many as fact. And why shouldn’t it be? It sounds logical. Like the Turing Test, there’s a sense of poetry to its logic, and its ramifications, which involve robots. But however it applies to your opinion of the corpse-eyed cartoons in The Polar Express, the Uncanny Valley adds nothing of value to the field of robotics. It’s science as junk food.

The Turing Test is also an oversimplified and often unfortunately deployed concept. Its greatest legacy is the chatbot, and the competitions that try, and generally fail, to glorify the damnable things. But where the Uncanny Valley and the Turing Test differ is in their vision. The Turing Test, as we’ve come to understand it, and as last weekend’s event proves, is a hollow measure. Turing, however, was still a visionary. And in his strange, sloppy, apparently over-reaching Imitation Game, he offers a brilliant insight into the nature of human and artificial intelligence.

Talking about your hair is smarter than it sounds.

* * *

Turing’s first sample question for the Imitation Game reads, “Will X please tell me the length of his or her hair?” And the notional answer, from a human male: “My hair is shingled, and the longest strands are about nine inches long.”

Think about what’s happening in that response. The subject is picturing (presumably) someone else’s hair, or whipping up a visual from scratch. He’s included a reference to a specific haircut, too, rather than a plainly descriptive mention that it’s shorter in back.

If a machine could deliver a similar answer, it would mean one of two things.

The cynical possibility is that its programmers are great at writing scripted responses, and lucked out when it detected the word “hair.” The less cynical, pre-chatbot possibility is that the computer is able to access an image, and describe its physical characteristics, as well as its cultural context.

Making gender such a core component of a test for machine intelligence still makes me uneasy, and seems like the sort of tangential inclusion that would be lambasted by modern researchers. But what Turing sought was the ability to process data on the fly, and draw together multiple types of information. Intelligence, among other things, means understanding things like length and color, but also knowing what shingled hair is.

The Imitation Game also has better testing methodology than the standard version of the Turing Test, since it involves comparing a human’s ability to deceive with a machine’s ability to do the same. At first glance, this might seem like insanity. If this test is supposed to lead to computers that think like us, who cares if they can specifically pretend to be one gender or another? What makes the Imitation Game brilliant, though, is that it’s a contest. It sets a specific goal for programmers, instead of staging an open-ended demonstration of person-like computation. And it asks the computer to perform a task that its human competitor could also fail at. The Turing Test, on the other hand, doesn’t pit a computer against a person in an actual competition. Humans might be included as a control element, but no one expects them to fail at the most basic of all tasks: coming across as a person.

The Imitation Game might still be vulnerable to modern chatbot techniques. As legions of flirty programs on “dating” sites can attest, falling back on lame stereotypes can be a surprisingly successful strategy for temporarily duping humans. There’s nothing perfect about Turing’s original proposal. And it shouldn’t be sacred either, considering its advanced age, and the developments in AI since it was written. But for all its problems and messy socio-cultural complications, I don’t think we’ve done Turing any favors by replacing the Imitation Game with the Turing Test. Being better than a man at pretending to be a living woman is an undeniably fraught victory condition for AI. But it’s a more contained experiment than simply aping the evasive chatroom habits of semi-literate humans, and would call for greater feats of machine cognition. After this latest cycle of breathless announcements and well-deserved backlash, no one should care when the next unthinking collection of auto-responses passes the Turing Test.

But if something beats a human at the Imitation Game?

I’m getting chills just writing that.