Bill Gates Fears A.I., But A.I. Researchers Know Better

The general obsession with superintelligence is only getting bigger, and dumber

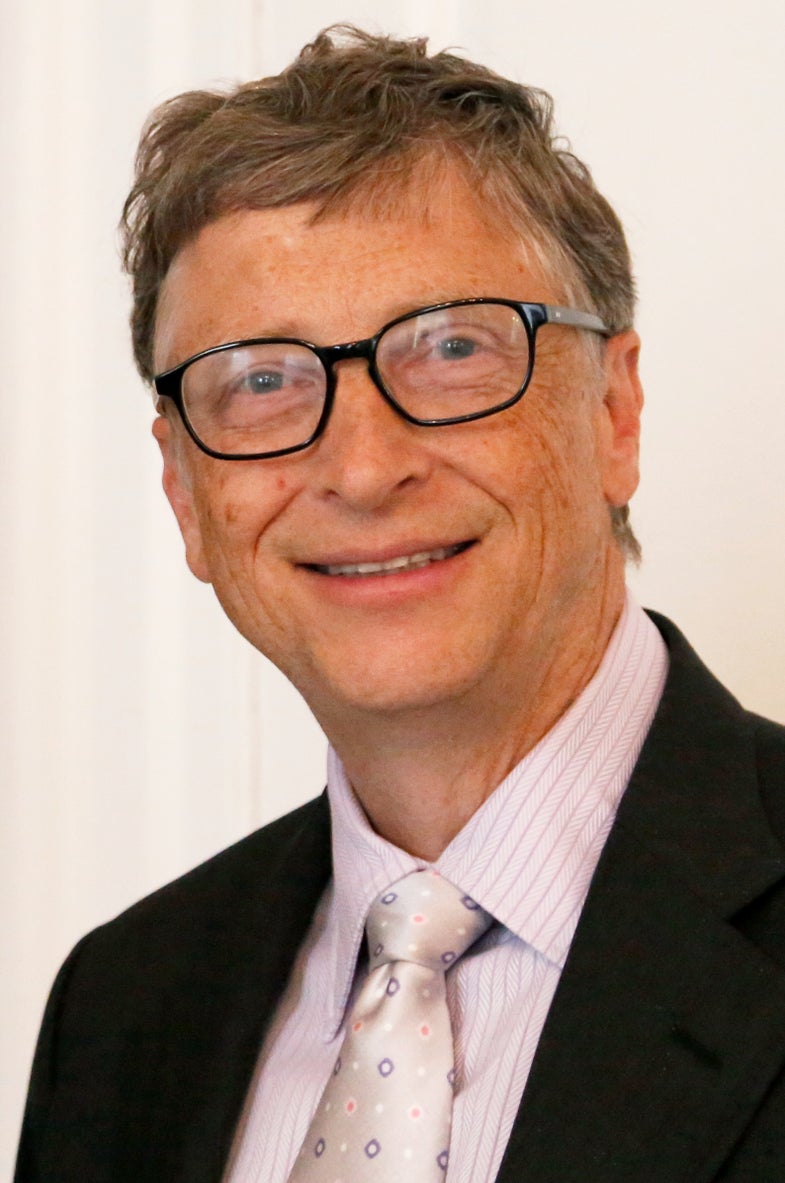

Ladies and gentlemen, please welcome Bill Gates to the A.I. panic of 2015.

During an AMA (ask me anything) session on Reddit this past Wednesday, a user by the name of beastcoin asked the founder of Microsoft a rather excellent question. “How much of an existential threat do you think machine superintelligence will be and do you believe full end-to-end encryption for all internet activity can do anything to protect us from that threat (eg. the more the machines can’t know, the better)??”

Sadly, Gates didn’t address the second half of the question, but wrote:

For robo-phobics, the anti-artificial-intelligence dream team is nearly complete. Elon Musk and Bill Gates have the cash and the clout, while legendary cosmologist Stephen Hawking—whose widely-covered fears include both evil robots and predatory aliens—brings his incomparable intellect. All they’re missing is the muscle, someone willing to get his or her hands dirty in the preemptive war with killer machines.

Actually, what these nascent A.I. Avengers really need is something even more far-fetched: any indication that artificial superintelligence is a tangible threat, or even a serious research priority.

Maybe I’m wrong, though, or too dead-set on countering the growing hysteria surrounding A.I. research to see the first glimmers of monstrous sentience gestating in today’s code. Perhaps Gates, Hawking and Musk, by virtue of being incredibly smart guys, know something that I don’t. So here’s what some prominent A.I. researchers had to say on the topic of superintelligence.

I had originally contacted these individuals for an upcoming Popular Science print story, but if there was ever a time that called for context surrounding A.I., it’s right now. In that spirit, I’m running full responses to my questions, with little to no editing or cherry-picking. So the quotes to follow are dense by design. I should also explain that I didn’t pick these researchers based on previously published opinions, as a kind of confirmation-bias peanut gallery, but because of their research history and professional experience related to machine learning and deep learning. When people discuss neural networks, cognitive computing, and the general notion of A.I. systems eventually reaching or beating human intelligence, they’re usually talking about machine and deep learning. If anyone knows whether it’s time to panic, it’s these researchers.

“Artificial superintelligence isn’t something that will be created suddenly or by accident.”

Here’s Dileep George, co-founder of A.I. startup Vicarious, on the risks of superintelligence:

This perspective from Vicarious is relevant not just because of its current work in learning-based A.I., but because Elon Musk has presented the startup as part of his peek behind the curtain. Musk told CNBC that he invested in Vicarious, “not from the standpoint of actually trying to make any investment return. It’s purely I would just like to keep an eye on what’s going on with artificial intelligence.” He also wrote that, “The leading A.I. companies have taken great steps to ensure safety. The [sic] recognize the danger, but believe that they can shape and control the digital superintelligences and prevent bad ones from escaping into the Internet. That remains to be seen…”

So I asked Vicarious what sort of time and resources they devote to safeguarding against the creation of superintelligence. This was the response from D. Scott Phoenix, the other co-founder of Vicarious:

Vicarious isn’t doing anything about superintelligence. It’s simply not on their radar. So which companies is Musk talking about, and are any of them seriously worried about, as he put it, bad superintelligences “escaping into the Internet?” How, in other words, do you build a prison for a demon (Musk’s description of A.I., not mine) when demons aren’t real?

This is how Yann LeCun, Facebook’s director of A.I. research, and founding director of the NYU Center for Data Science, responded to the question of whether companies are actively keeping A.I. from running amok:

LeCun also addressed one of the main sources of confusion among those trying to frame superintelligence as inevitable: the difference between intelligence and autonomy.

This is a crucial distinction, and one that gets to the heart of the panic being fueled by Musk, Hawking, and now Gates. A.I. can be smart without being sentient, and capable without being creative. More importantly, A.I. is not racing along some digital equivalent of a biological evolutionary track, competing and reproducing until some beneficial mutation spontaneously triggers runaway superintelligence. That’s not how A.I. works.

“We would be baffled if we could build machines that would have the intelligence of a mouse in the near future, but we are far even from that.”

A search algorithm serves up results faster than any human could, but is never going to suddenly grow a code of ethics, and wag its finger at your taste in pornography. Only a science fiction writer, or someone who isn’t a computer scientist, can imagine the puzzle of general, human-level intelligence suddenly, miraculously solving itself. And if you believe in one secular miracle, why not add another, in the form of self-assembling superintelligence?

LeCun did come to Musk’s defense, saying that his comment comparing A.I. to nukes was “exaggerated, but also misinterpreted.”

The researcher who had the most to say on this topic was the one referenced in that last response. Yoshua Bengio is head of the Machine Learning Laboratory at the University of Montreal, and widely considered one of the pioneering researchers in the sub-field of deep learning (along with LeCun). Here’s his response to the question of whether A.I. research is inherently dangerous:

I also asked whether superintelligence is inevitable. Bengio’s response:

And as for the notion, also proposed by Musk, that A.I. is “potentially more dangerous than nukes,” and should be handled with care, Bengio addressed the present, without slamming the door on the future unknown.

The common thread in these responses is time. When you talk to A.I. researchers—again, genuine A.I. researchers, people who grapple with making systems that work at all, much less work too well—they are not worried about superintelligence sneaking up on them, now or in the future. Contrary to the spooky stories that Musk seems intent on telling, A.I. researchers aren’t frantically installing firewalled summoning chambers and self-destruct countdowns. At best, these scientists are pondering the question of superintelligence. It’s not a binary situation. The difference between panic and caution is a matter of degree. If the people most familiar with the state of A.I. research see superintelligence as a low-priority research topic, why are we letting random, unsupported comments from anyone—yes, even Bill Gates—convince us otherwise?