MIT Demonstrates Smart Cars That Predict Each Other’s Moves to Avoid Collisions

Someday, our cars will all be connected to each other, sharing traffic information, linking us into “road trains,” and swapping position info so that collisions become a thing of the un-wired past. But even if new cars came equipped with such networking tools tomorrow (and they won’t), it would be decades before every car on the road was wired into the system. So MIT researchers are taking a different tack: modeling human driving behavior to create algorithms that help computerized cars predict what human drivers are going to do next.

To suss out the patterns in human driving, the team first broke the act of operating a moving vehicle down into its two most basic modes: accelerating and decelerating. Using onboard sensors, the computerized intelligent transportation system (ITS) first determines which of these modes another vehicle is in. From there, there is a finite (but still large) number of positions on the roadway the vehicle could occupy after any given interval, be it one second or ten.
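The "finite but still large" set of positions can be sketched for a single car moving along one lane. This is a minimal illustration, not the MIT team's code: the acceleration and speed limits (`a_min`, `a_max`, `v_max`) and the function names are hypothetical. Because the car can only speed up or slow down within fixed limits, every position it can reach after a given time lies between the full-braking and full-acceleration extremes.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    position: float  # metres along the lane
    speed: float     # metres per second


def distance_travelled(speed: float, accel: float, duration: float, v_max: float) -> float:
    """Distance covered under constant acceleration, with speed held in [0, v_max]."""
    if accel == 0:
        return speed * duration
    if accel > 0:
        t_limit = max(0.0, (v_max - speed) / accel)  # time until the speed cap is hit
    else:
        t_limit = speed / -accel  # time until the car comes to a stop
    t = min(duration, t_limit)
    distance = speed * t + 0.5 * accel * t * t
    if accel > 0 and duration > t_limit:
        distance += v_max * (duration - t_limit)  # cruise at v_max for the remaining time
    return distance


def reachable_interval(state, duration, a_min=-4.0, a_max=2.0, v_max=30.0):
    """(nearest, farthest) position the car could occupy after `duration` seconds.

    Hypothetical limits: 4 m/s^2 braking, 2 m/s^2 acceleration, 30 m/s speed cap.
    """
    nearest = state.position + distance_travelled(state.speed, a_min, duration, v_max)
    farthest = state.position + distance_travelled(state.speed, a_max, duration, v_max)
    return nearest, farthest


print(reachable_interval(VehicleState(position=0.0, speed=10.0), 1.0))  # (8.0, 11.0)
```

Stretching `duration` from one second to ten widens the interval considerably (here to 12.5 m through 200 m), which is why the candidate positions pile up so quickly without some model of what the driver is likely to do.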

It’s here that the human behavioral modeling comes into play. The computer assesses other factors (is it an intersection or an onramp?) and other data about where human drivers tend to accelerate or slow down. All this, filtered through an algorithm, gives the ITS a pretty good idea of where a vehicle might be immediately headed.
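One hypothetical way to turn "data about where human drivers tend to accelerate or slow down" into a prediction is to count how drivers switch between the two modes in each kind of road context, then pick the most frequent next mode. This is a stand-in for illustration, not the algorithm the researchers used: the context labels, the `(context, mode, next_mode)` observation format, and the function names are assumptions, and the sample data is made up.

```python
from collections import Counter, defaultdict


def estimate_transitions(observations):
    """Turn (context, mode, next_mode) observations into conditional frequencies.

    Returns {(context, mode): {next_mode: probability}}.
    """
    counts = defaultdict(Counter)
    for context, mode, next_mode in observations:
        counts[(context, mode)][next_mode] += 1
    table = {}
    for key, counter in counts.items():
        total = sum(counter.values())
        table[key] = {m: n / total for m, n in counter.items()}
    return table


def predict_mode(table, context, mode):
    """Most likely next mode; with no data, assume the driver keeps the current mode."""
    probs = table.get((context, mode))
    if not probs:
        return mode
    return max(probs, key=probs.get)


# Made-up sample: near intersections, accelerating drivers usually ease off.
sample = (
    [("intersection", "accelerating", "decelerating")] * 7
    + [("intersection", "accelerating", "accelerating")] * 3
    + [("onramp", "decelerating", "accelerating")] * 4
)
table = estimate_transitions(sample)
print(predict_mode(table, "intersection", "accelerating"))  # decelerating
print(predict_mode(table, "onramp", "decelerating"))        # accelerating
```

Falling back to the current mode when a context has no data is a design choice for the sketch; a real system would need a more careful default.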

The ITS-equipped vehicle then quickly works out the areas in which the two vehicles could theoretically collide (termed the “capture set”), predicts what the other car is going to do, and acts accordingly to stay out of those areas, where the risk of a collision is markedly higher.
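The capture-set idea can be illustrated with a deliberately simplified, one-dimensional model in which each car follows its own path and the two paths share one conflict zone. This is an assumption-heavy sketch, not the MIT team's formulation: the names, the acceleration limits, and the rule that the ITS car is "captured" when it can neither stop short of the zone nor clear it before the human-driven car could arrive (assuming that driver accelerates flat out) are all hypothetical.

```python
import math
from typing import NamedTuple


class Car(NamedTuple):
    position: float  # metres along the car's own path
    speed: float     # metres per second


def time_to_cover(distance, speed, accel, v_max):
    """Seconds to travel `distance` at constant `accel`, speed held in [0, v_max].

    Returns math.inf if the car would stop first.
    """
    if distance <= 0:
        return 0.0
    if accel == 0:
        return distance / speed if speed > 0 else math.inf
    if accel > 0:
        if speed >= v_max:
            return distance / speed
        d_cap = (v_max ** 2 - speed ** 2) / (2 * accel)  # distance until v_max is reached
        if distance > d_cap:
            return (v_max - speed) / accel + (distance - d_cap) / v_max
    elif distance > speed ** 2 / (2 * -accel):
        return math.inf  # braking car stops short of the target
    # Smallest positive root of 0.5*accel*t^2 + speed*t - distance = 0.
    return (-speed + math.sqrt(max(0.0, speed ** 2 + 2 * accel * distance))) / accel


def in_capture_set(its, human, its_zone, human_zone, a_min=-4.0, a_max=2.0, v_max=30.0):
    """True if the ITS car can neither stop short of the shared zone nor clear it
    before the human-driven car could possibly arrive.

    Each zone is (entry, exit), measured along that car's own path.
    """
    if human.position > human_zone[1]:
        return False  # the human car has already passed the conflict zone
    stopping_distance = its.speed ** 2 / (2 * -a_min)
    can_stop = stopping_distance < its_zone[0] - its.position
    its_clears_at = time_to_cover(its_zone[1] - its.position, its.speed, a_max, v_max)
    human_arrives_at = time_to_cover(human_zone[0] - human.position, human.speed, a_max, v_max)
    return not (can_stop or its_clears_at < human_arrives_at)


zone_its, zone_human = (30.0, 35.0), (40.0, 45.0)
print(in_capture_set(Car(0.0, 10.0), Car(0.0, 10.0), zone_its, zone_human))   # False: can still brake
print(in_capture_set(Car(20.0, 10.0), Car(0.0, 10.0), zone_its, zone_human))  # False: clears first
print(in_capture_set(Car(20.0, 10.0), Car(30.0, 10.0), zone_its, zone_human)) # True
```

Using the human driver's worst case (full acceleration) as the arrival time makes this check conservative; predicting the driver's likely behavior is what would let a real system narrow that window.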

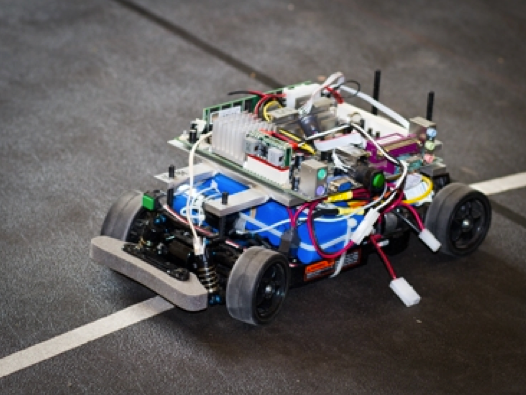

To test the system, the MIT team built two miniature cars, one equipped with ITS and the other controlled by a human driver, and put them on circular, overlapping tracks. They then ran 100 trials, rotating human drivers to account for any one driver’s style. The result: the ITS car stayed out of the “capture set” in 97 trials. It entered the capture set three times, and only one of those instances resulted in a collision.

Not bad. Of course, all of this has to happen in an instant in the real world, and adding more cars and more variables (pedestrians and cyclists, for instance) compounds the challenge. But the work is important for reasons that go beyond the roadway. If we’re truly going to learn to live alongside our robots, we don’t just need to know what they are going to do next. To some degree, they need to be able to predict our next moves as well.