The Best Measurement Yet of Earth’s Radioactivity Shows Half the Earth’s Heat Comes from Nuclear Decay

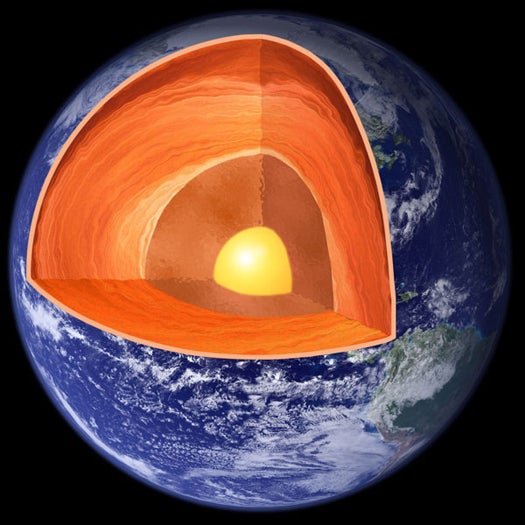

Scientists measuring the subatomic particles flowing from Earth’s interior have taken the most precise measurement ever gathered of the home planet’s radioactivity. It turns out nearly half of the Earth’s total heat output comes from decaying radioactive elements like thorium and uranium in the Earth’s crust and mantle. But that’s an answer that begets more questions.

Previously, estimates of just how much of the Earth’s heat came from radioactive decay varied, with many (accurately, it would seem) placing that value at about half of the roughly 40 terawatts of heat the Earth gives off. That heat drives convection currents in the outer core, which give us our magnetic field, and powers other natural processes as well (plate tectonics come to mind).

The measurement was made at the KamLAND detector in Japan, which has been tallying up the flux of antineutrinos flowing outward from the Earth’s interior since 2005 (antineutrinos interact with matter so rarely that it takes a while to gather a meaningful number of them). And, as usually happens, answering one question raises many more.

For instance, we now know that radioactive decay accounts for about half the Earth’s heat. But what of the other half? Some of it can surely be accounted for by what’s called primordial heat: heat left over from the Earth’s violent formation. But whether primordial heat could account for all of the remaining 20 terawatts is unknown (and some think it unlikely).

So even though we now have a pretty good handle on how much heat comes from radioactive decay, we still have plenty of questions about the Earth’s makeup that need answering. Feel free to theorize in the comments below.