You Shouldn’t Be Afraid Of That Killer Volkswagen Robot

Robolaw expert Ryan Calo says to stop worrying and love the machine

Headlines rang out across the internet yesterday that a robot killed someone in Germany. Beneath the sensationalist surface, there was a tragic truth: an industrial robot at a Volkswagen plant in Germany had indeed killed a 22-year-old worker who was setting it up. Coverage notwithstanding, this didn’t seem like the start of a machine-led apocalypse, but I wanted a second opinion before heading to my backyard bunker. Ryan Calo is a law professor at the University of Washington, and he’s published academic work on our coming robot future and on the interaction between robots and cyberlaw.

I sent Calo some questions by email, and below have paired them with his responses. I’ve also added links, where appropriate.

Popular Science: Yesterday Twitter was all abuzz about an industrial robot killing someone. You said at the time “this is relatively common.” What did you mean by that?

Ryan Calo: In the United States alone, about one person per year is killed by an industrial robot. The Department of Labor keeps a log of such events with titles like “Employee Is Killed When Crushed By Robot” (2006) or “Employee Was Killed By Industrial Robots” (2004).

You’ve written before about the potential for unique errors from autonomous machines. In future “robot kills man” stories, what characteristics should we look out for that make something go from “industrial accident” to “error with autonomy”?

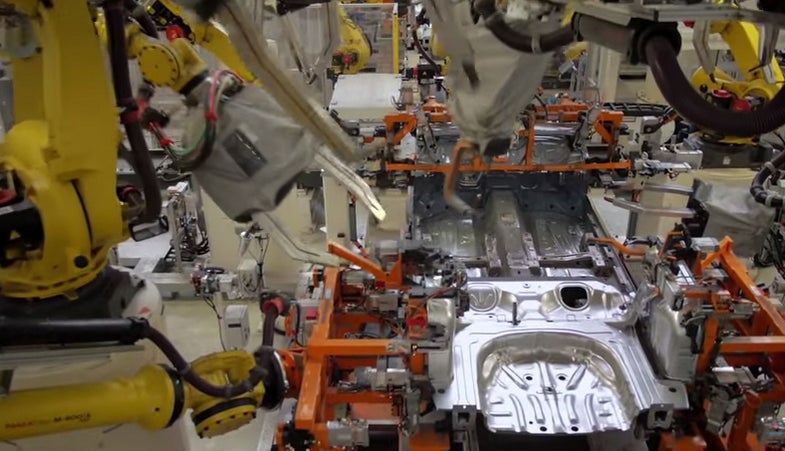

Right. Industrial robots tend to do the same thing again and again, like grabbing and moving, and generally cannot tell what they are working with. That’s why factories establish “danger” or “kill” zones that people have to stay out of while the robot is operating. I write about the prospect that future robots will display not autonomy but emergent behavior. Emergent behavior, as defined by Steven Johnson in his excellent book, refers to activity that is useful but surprising. Sometimes things will happen that the coders never anticipated and that horrify them. For example, Google’s image-tagging algorithm recently labeled a picture of two people of color as “gorillas.” Google was properly mortified.

I talk about what happens when we start to see physical robots that can display emergent behavior. There, the accidents are of a different kind: less likely to involve error on the part of the victim, but perhaps no more predictable than the output of Google’s tagging algorithm.

Initial reports attribute the death to human error. At what point do you think having a human “in the loop” for an autonomous system constitutes a liability, instead of a safety feature?

In industrial robotics, that ship has long sailed. You couldn’t have a person in the loop and maintain anything like today’s productivity. Rather, you have to try to make sure, through protocols, warnings, and so on, that people stay out of the robot’s way.

Discussion about keeping humans “in the loop” so that robots don’t kill is of course a big part of the debate when it comes to autonomous weapons. There is widespread agreement that people maintain “meaningful human control” but little sense of what that means.

Should we accept accidents involving machines as a routine part of life, like we do with automobile injuries? Or do hyperbolic “robot kills man” headlines come with any cautionary benefit?

Sometimes I worry that fear of robots will result in a net loss of human life. I tell a story about a driverless car that is faced with a choice between running into a grocery cart or running into a stroller. Say the driverless car is 20 times safer than a human driver. But if it picks wrong in my scenario, the headline reads “Robot Kills Baby to Save Groceries” and sets autonomous driving back a decade.

Okay, but how do I know you aren’t yourself a robot trying to lure us into a false sense of safety before the machines all take over?

I’m afraid I can’t answer that, Kelsey.