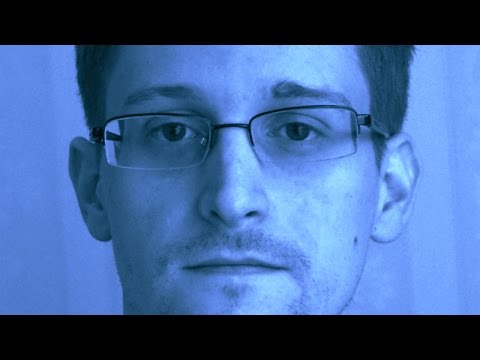

Edward Snowden: The Internet Is Broken

The activist talks to Popular Science about digital naïveté

In 2013, a now-infamous government contractor named Edward Snowden shined a stark light on our vulnerable communications infrastructure by leaking 10,000 classified U.S. documents to the world.

One by one, they detailed a mass surveillance program in which the National Security Agency and others gathered information on citizens via phone tracking and tapping undersea Internet cables.

Three years after igniting a controversy over personal privacy, public security, and online rights that he is still very much a part of, Snowden spoke with Popular Science in December 2015 and shared his thoughts on what’s still wrong and how to fix it.

Popular Science: How has the Internet changed in the three years since the release?

Edward Snowden: There have been a tremendous number of changes that have happened, and not just on the Internet. It has changed our culture, it has changed our laws, it’s changed the way our courts decide issues, it’s changed the way people consider what the Internet means to them, what their communication security means to them.

The Internet as a technological development has reached within the walls of every home. Even if you don’t use it, even if you don’t have a smartphone, even if you don’t have a laptop or an Internet connection or a phone line, your information is handled by tax authorities, by health providers and hospitals, and all of that routes over the Internet.

This is a force for tremendous good, but it is also something that can be abused. It can be abused by small-time actors and criminals. It can also be abused by states. And this is what we really learned in 2013. During an arrest, police traditionally have had the ability to search anything they find on your person: if you had a note in your pocket, they could read it. But now we all carry smartphones on us, and smartphones don’t just have this piece of ID, or your shopping list, or your Metrocard. Your entire life now fits in your pocket. And it was not until after 2013 that the courts were forced to confront this decision.

In the post-9/11 era, in the context of this terrorism threat that has been very heavily promoted by two successive administrations now, there was this idea that we had to go to the dark side to be able to confront the threat posed by bad guys. We had to adopt their methods for ourselves.

We saw the widening embrace of things like warrantless wire-tapping during the Bush administration, as well as things like torture[1]. But in 2014, there was the Riley decision that went to the Supreme Court, and that was one of the most significant changes.[2]

In the Riley decision, the courts finally recognized that digital is different. They recognized that the unlimited access of government to the continuum of your private information and private activities, whether that is the content of your communications or the metadata of your communications, when it is aggregated, means something completely different than what our laws have been treating it as previously.

It does not follow that police and the government then have the authority to search through your entire life in your pocket just because you are pulled over for a broken taillight. When we change this over to the technical fabric of the Internet, our communications exist in an extraordinarily vulnerable state, and we have to find ways of enforcing the rights that are inherent to our nature. They are not granted by government, they are guaranteed by government. The reality is a recognition of your rights, which includes your right to be left alone (as the courts describe privacy) and to be free from unreasonable search and seizure, as we have in our Fourth Amendment.

And one of the most measurable changes is guaranteeing those rights, regardless of where you are and regardless of where the system is being used, through encryption. Now it is not the magic bullet, but it is pretty good protection for the rights we enjoy.

About eight months out from the original revelations, in early January 2014, Google’s metrics showed there was a 50 percent increase in the amount of encrypted traffic that their browsers were handling[3]. This is because all of the mainline Internet service providers (Gmail, Facebook, and even major website providers) are encrypted, and this is very valuable. You can enforce a level of protection for your communications simply by making very minor technical changes.

And this is the most fascinating aspect. Encryption moves from an esoteric practice to the mainstream. Does that become universal, five to 10 years later?

Yeah, the easiest way to analogize this is that 2013 was the “atomic moment” for the profession of computer scientists and the field of technologists. The nuclear physicists of a previous era were just fascinated with their capabilities and what secrets they could unlock, but didn’t consider how these powers would be used in their most extreme forms.

It is the same way in technology. We have been expanding and expanding because technology is incredibly useful. It is incredibly beneficial. But at the same time, we technologists as a class knew academically that these capabilities could be abused, but nobody actually believed they would be abused. Because why would you do that? It seemed so antisocial as a basic concept.

But we were confronted with documented evidence in 2013 that even what most people would consider to be a fairly forthright, upstanding government was abusing these capabilities in the most indiscriminate way. They had created a system of “bulk collection”, as the government likes to describe it; the public calls it mass surveillance. It affected everybody. It affected people overseas and at home, and it violated our own Constitution. And the courts have now ruled multiple times that it did do so[4].

Prior to 2013, everybody who thought about the concept of mass surveillance either had to consider it an academic concept, or they were a conspiracy theorist. Now, though, we have moved from the realm of theory to the realm of fact. We are dealing with actual credible and documented threats, and because of that, we can actually start to think about how do we deal with that? How do we remedy the threats?

And how do we provide security for everybody?

And Brazil recently shut down WhatsApp.

Right[5], and this is more topical[6]. Because of the way the WhatsApp service is structured, the largest messaging service in the world doesn’t know what you are saying. It doesn’t hold your messages, it doesn’t store your messages in a way that it can read. Which is much safer against abuse than if you simply have AT&T holding a record of every text message you’ve ever sent.

During the first crypto-war in the 1990s, the NSA and the FBI asked for backdoors for all the world’s communications that were running on American systems. The NSA designed a chip called the Clipper chip that encrypted the communications in a way that they could be broken by the government, but your kid sister wouldn’t be able to read them[6]. The NSA said no one is actually going to be able to break this: it is not a real security weakness, it is a theoretical security weakness.

Well, there was a computer scientist at AT&T Bell Laboratories, Matt Blaze, who is now a professor at the University of Pennsylvania, who took a look at this chip and, as a single individual, broke the encryption, which the government said was unbreakable[7]. Only they could break it. This is what is called ‘nobody but us’ sort of surveillance. Well, the thing is, it is very difficult to substitute the judgment of ten engineers behind closed doors in a government lab somewhere for the entire population of the world, and say these ten guys are smarter than everybody else. We know that doesn’t work.

This leads to the question of the future. Technology progresses at what we see appears to be an accelerating rate. Before 2013, before we had a leg to stand on and say this is what is actually going on, we had developed a panopticon[8], which no one outside of the security services was fully authorized to know. Even members of Congress, like Ron Wyden, were being lied to on camera by the top intelligence officials[9] of the United States. What if we were never able to take any steps to correct the balance there?

Prior to 2013, everything we did on the Internet was more or less unprotected, simply because no one wanted to make the effort. There were capabilities that existed. There were tools that existed. But by and large, everything we did on the Internet, as we engaged on the Internet, we were electronically naked. The most lasting impact is really on the classes of cryptographers and security engineers, who now recognize that the path across the network is hostile terrain.

And this has now changed significantly, with Tor and Signal.

We are starting to see a sense of obligation on the part of technologists to clothe the users. And users isn’t the best language to use. We use users, we use customers as a sector, but we mean people.

And this is not just the United States’ problem, it is a global problem. One of the primary arguments used by apologists for this surveillance state that has developed across the United States and in every country worldwide is a trust of the government. This is critical: even if you trust the U.S. government and its laws, and believe we’ve reformed these issues, think about the governments you fear the most, whether it is China, Russia or North Korea, or Iran. These spying capabilities exist for everyone.

Technically they are not very far out of reach. The offense is easier than the defense, or has been, but that is beginning to change. We can move this status quo to a dynamic where everyone is safe.

Protecting the sanctity of critical infrastructure of communications online is not a luxury good or right. It is a public necessity, because of what is described as the cyber-security problem. Look at the Sony hack[10] in late 2014, or the Office of Personnel Management hack[11] last summer, where the federal government, arguably the world’s most well-resourced actor, got comprehensively hacked. They weren’t using any form of encryption to protect the incredibly sensitive records of people who have top secret clearances. The only way to provide security in this context is to provide it for everyone. Security in the digital world is not something that can be selective.

There is a seminal paper called ‘Keys Under Doormats’. It’s really good. The idea here is that if you weaken security for an individual or for a class of individuals, you weaken it for everyone. What you are doing is you’re putting holes in systems, keys under doormats, and those keys can be found by our adversaries as well as those we trust.

Is there any way that the technology and intelligence communities can reconcile?

There actually is. The solution here is for both sides of the equation to recognize that security premised on a foundation of trust is, by its very nature, insecure. Trust is transient. It isn’t permanent. It changes based on situations, it changes based on administrations.

Let’s say you trust President Obama with the most extreme powers of mass surveillance, and think he won’t abuse them. Would you think the same thing about a President Donald Trump, having his hand on the same steering wheel? And these are dynamics that change very quickly.

This is not just an American thing; this is happening in every country in every part of the world. We first need to move beyond the argumentation by policy officials of wishing for something that is technically impossible. The idea is ‘Let’s get rid of encryption.’ It is out of their hands. The jurisdiction of Congress ends at its borders. Even if all strong encryption is banned in the United States because we don’t want Al Qaeda to have it, we can’t stop a group from developing these tools in Yemen, or in Afghanistan, or any other region of the world and spreading the tools globally.

We already know the program code, and again, we dealt with this in the ’90s. It is a genie that won’t go back in the bottle.

Once we move beyond what legislation can accomplish, we need to think about what it should accomplish. There is an argument where the government says, ‘You should give up a lot of your liberty because it’ll give us some benefit in terms of investigatory powers, and we believe it might lead to greater security.’ But security, surveillance, and privacy are not contrary goals. You don’t give up one and get more of the other. If you lose one, you lose the other. If you are always observed and always monitored, you are more vulnerable to abuse than you were before.

They are saying we are balancing something, but it is a false premise. When you can’t protect yourself, you are more vulnerable to the depredations of others, whether they are criminal groups, government, or whomever. What you can’t have is what the courts have referred to as the right to be left alone, in which you can selectively participate and share. You can’t experiment or engage in an unconsidered conversation with your friends and your family because you’ll worry what that is going to look like in a government or corporate database 20 or 30 years down the road.

There are those who argue we need to get rid of that. All of this individuality is dangerous for large and well-organized societies. We need people who are observed and controlled because it is safer. That may be a lot of things, but the one thing I’d argue it is not is American.

We are comfortable sharing our data with Amazon, but not the government. Does that seem counterintuitive?

When we think about privacy, what we are describing is liberty. We are describing a right to be left alone. We can always choose to waive that right, and this is the fundamental difference between corporate data collection and government surveillance from every sort of two-bit government in the world.

You can choose not to use Amazon, or log onto Facebook[12], but you can’t opt out of governmental mass surveillance that watches everybody in the world without regard to any suspicious criminal activity or any kind of wrongdoing. This is the challenge.

It’s not that all surveillance is bad. We don’t want to restrict the police from doing anything. The idea is that traditional and effective means of an investigation don’t target a platform, a service, or a class. If you were to stop a terrorist attack, you target a suspect, an individual. That is the only way you can discriminate and properly apply the vast range of military and law enforcement intelligence capabilities. Otherwise, you are looking at a suspect pool of roughly 7 billion people in the world.

This is the reason mass surveillance doesn’t work. You don’t have to take my word for it, particularly in the context of public communication. You can cite the Privacy and Civil Liberties Oversight Board’s review on section 215[13], and their specific quotes, these are their words, “We are aware of no instance in which the [mass surveillance] program directly contributed to the discovery of a previously unknown terrorist plot or the disruption of a terrorist attack.”[14]

This begs the question: why? Why doesn’t mass surveillance work? That is the problem with false positives and false negatives. If you go look, our program is 99.9 percent effective, and that sounds really good, but when you think about that in the context of a program, that means one out of every thousand people is going to be inaccurately identified as a terrorist, or one out of every thousand terrorists is actually going to be let go by the system, and considered to not be a terrorist.

And the real problem is that our algorithms are not 99.9 percent effective. They are about 80 percent effective at best. And when you upscale that to the population of the entire world, even if they were 99.99999 percent effective, suddenly you are transforming millions of completely innocent people into terrorists. At the same time, you are transforming tons of actual terrorists, whom any police officer, after a cursory review of their actions, would say ‘That’s suspicious,’ into law-abiding citizens. That is the fundamental problem there, and why it hasn’t worked. So if it hasn’t been effective, why are they doing it? It costs a lot of money, so why deal with it at all?
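The false-positive arithmetic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `screening_outcomes`, the figure of 10,000 actual targets, and the simplification that a single accuracy figure applies equally to innocent people and to real targets are all hypothetical and do not come from the interview. Only the 7 billion population and the 99.9 and 80 percent figures are taken from the text.

```python
def screening_outcomes(population, actual_targets, accuracy):
    """Expected error counts when one classifier is applied to everyone.

    Simplifying assumption (not from the interview): the same error rate,
    1 - accuracy, applies to innocent people (wrongly flagged) and to
    real targets (wrongly cleared).
    """
    error_rate = 1.0 - accuracy
    innocents = population - actual_targets
    false_positives = innocents * error_rate       # innocents flagged
    false_negatives = actual_targets * error_rate  # targets missed
    true_positives = actual_targets - false_negatives
    flagged = true_positives + false_positives
    return {
        "false_positives": false_positives,
        "false_negatives": false_negatives,
        # Of everyone the system flags, what fraction is a real target?
        "share_of_flagged_who_are_targets": (
            true_positives / flagged if flagged else 0.0
        ),
    }


WORLD_POPULATION = 7_000_000_000  # "roughly 7 billion", as in the interview
HYPOTHETICAL_TARGETS = 10_000     # illustrative assumption, not a real figure

for accuracy in (0.999, 0.80):
    result = screening_outcomes(WORLD_POPULATION, HYPOTHETICAL_TARGETS, accuracy)
    print(
        f"accuracy {accuracy:.1%}: "
        f"{result['false_positives']:,.0f} innocent people flagged, "
        f"{result['false_negatives']:,.0f} targets missed, "
        f"{result['share_of_flagged_who_are_targets']:.4%} of flagged are targets"
    )
```

Under these assumptions, a 99.9 percent accurate system applied to 7 billion people flags roughly 7 million innocent people, and well under 1 percent of the people it flags are actual targets. At 80 percent accuracy the flagged group grows to well over a billion.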

And why do we continue to have these same conversations?

These programs were never about terrorism. They are not effective for terrorism. But they are useful for a lot of other things, like espionage, diplomatic manipulation, and ultimately social control.

Imagine yourself sitting at a desk, and you have a little box that lets you search anybody’s email in the world; it lets you pull up their entire web history, anything they’ve ever typed into a search engine; you can read the message they are typing on Facebook as they do it; you can turn on the webcam on any private home; you can follow where anyone goes through their cell phone at any time. This is obviously an extraordinarily valuable mechanism of influence, of power, of capability.

What it doesn’t do, though, is stop terrorist attacks.

And this is one of the fundamental problems of the public debate. The officials who are promoting and desire these capabilities recognize this — ‘Look, it’ll give us an advantage in foreign intelligence collection. It’ll allow us to compete on a stronger basis in the global economic market.’ These are arguments they still might win because people may be OK with that bargain: ‘That’s fine. I don’t care if you spy on foreigners. I don’t care if you commit economic espionage as long as it benefits us. I don’t care if you are monitoring protestors because I don’t agree with protestors.’

But that is a very different argument, and one that is more difficult to win, than saying this will save lives, this will stop terrorism, and this is the solution to our problems.

Most people can get behind surveillance as a means to saving lives, but to commit economic espionage, or just spying for spying’s sake, the arguments are more difficult to make.

Right. And they have been making this argument since 2001, but we are now in 2016. To me personally, this is why I think the environment, and the response, has changed so much since 2013. They said, ‘What this guy did was dangerous. The press was irresponsible revealing classified programs. Even if [the NSA] did violate the law, even if they did violate the Constitution, people will die over this.’

Since 2013, all the top officials at the NSA and the CIA have been brought on the floor of Congress, and Congress has begged them repeatedly, Can you show us any cases? Name a single person who has died as a result of these disclosures? And they’ve never been able to do that. They’ve never been able to show a particular national security operation that has been damaged as a result.[15]

The dynamic here is the same. It had been easy to make the argument that you should be afraid because we just don’t know. That argument no longer holds 15 years later.

The rise of hacktivism and white hat hackers, then, seems like a direct argument against that.

There are a number of organizations around the world, like the Tor Project, that, even if they can’t solve the problem, are improving the status quo that people are dealing with around the world. Even if you, sitting in Chicago, are being comprehensively surveilled, you might not be concerned. But if you allow that to happen simply because you don’t care about its impact, you are ignoring the collective impact it has on everyone else. This is the fundamental nature of rights. Arguing for surveillance because you have nothing to hide is no different than making the claim, ‘I don’t care about freedom of speech because I have nothing to say.’

Rights are not just individual. They are collective and universal. And I am now working at the Freedom of the Press Foundation to look at: How do we help people in the most difficult circumstances, and who face the most severe threats of surveillance?

Politicians are trying to convince the public to rely on security that is premised on the idea of trust. This is the current political problem: ‘Let us do this stuff, and we won’t abuse it.’ But that trust is gone. They violated it.

There is a technical paradigm that is being shifted to where we no longer need to trust the people handling our communication. We simply will not give them the capability to abuse it in the first place. We are not going to bare our breast for them to drive the knife in if they change their mind about us.

You have said, and I am paraphrasing, that the Internet allowed you to explore your capacity as a human being. What does that mean for future generations more immersed online?

Let’s think about the example of AT&T sharing with the government more than 26 years of phone records[16]. That’s the full span of these people’s lives. They’ll never have made a phone call on AT&T that hasn’t been captured. Their very first AT&T call, when they were four years old and called their mom, has been recorded. And the argument that ‘It is just metadata. This is just your phone bills and calling records’ misunderstands what it really is and why it matters.

Metadata is the technical word for an activity record, so the government has been aggregating perfect records of private lives. And when you have all of someone’s phone records, purchase records, every website they’ve ever visited or typed into Google, or liked on Facebook, every cell phone tower their phone has ever passed and at what time, and what other cell phones were at that tower with them, what you’ve done is you’ve written a secret biography of every person that even they themselves don’t know.

When we think about surveillance as being a mechanism of control, at the lowest level it means that this young cohort is growing up in a society that has transformed from an open society to a quantified society. And there is no action or activity they could take that could be unobserved and truly free. People will say ‘We trust we’ll be ok,’ but this is an entire cohort that at any moment in the future could have their life changed permanently. And this is what I described in that first interview as ‘turnkey tyranny’[17].

It’s not that we think of it as evil. It’s that we’ve said for generations that absolute power corrupts absolutely, and this is a country where the Supreme Court said two years ago that the American Revolution was actually kicked off in response to general warrants of the same character that are happening in the U.S. today.[18]

Let’s interject a bit of levity to the discussion. Pop culture has garnered praise recently for accurately portraying those threats, and depicting hacking. Do you partake in shows like ‘Mr. Robot’?

Hollywood is only going to be so accurate in the technical sense, but yes, I do watch it. [Television and movies] are improving slowly. They are certainly better than the neon 3D virtual city back in the ’80s. But it is going to be a long road.

There is also a very interesting cultural dynamic we see shifting. For example, Captain America, in the recent Winter Soldier movie, quite openly questioned whether it is patriotism to have absolute loyalty to a government, or whether it is more critical to have loyalty to the country’s values. There is that old saying, ‘my country, right or wrong,’ that was criticized for a long time as blindly jingoistic, but eventually it has been reformulated, “My country right or wrong. Right to be kept right, wrong to be put right.”

And this is something we are rediscovering. It is critical that the United States not just be a strong and a powerful country. We have to have a moral authority to recognize that we have the capability to exercise certain powers, but we don’t. Even though it would provide us an advantage, we realize it is something that would lose us something that is far more valuable. We saw this in the Cold War, but we forgot about it in the immediate post-9/11 moment.

With the benefit of hindsight, what would you have done differently in 2013?

It was never my goal to fundamentally change society. I didn’t want to say what things should or shouldn’t be done. I wanted the public to have the capability and the right to decide for themselves and to help direct the government in the future. Who holds the leash of government? Is it the American people, or is it a few people sitting behind closed doors?

And I think we have been effective in getting a little bit closer to the right balance there. We haven’t solved everything. But no single person acting in a vacuum is going to be able to solve problems so large on their own. And none of this would have happened without the work of journalists.

Would I have done anything different? I should have come forward sooner. I had too much faith that the government really would do no wrong. I was drinking the Kool-Aid in the post-9/11 moment. I believed the claims of government, that this was a just cause, a moral cause, and we don’t need to listen to these people who say we broke this law or that law. No one could really prove with finality that this was not the case, that the government was actually lying.

One of the biggest legacies is the change of trust. Officials at the NSA and the CIA were seen as James Bond types, but now, they are seen as war criminals. At the same time, people like Ashkan Soltani were hired by the White House. He had been reporting on the archive in 2013 and printing classified information to the detriment of these people[19]. There is this really interesting dynamic where the people you would presume would be persona non grata in Washington are now the ones in the White House, and the ones previously in the White House are now exiled and are being asked ‘Why haven’t you been prosecuted?’ It gives the flavor of that change.

So how does the Internet look now, after this seminal moment? We have more encryption than ever before, air-gapped laptops, burner phones. How do you see our changing relationship with the Internet in the coming decades?

We are at a fork in the road. We’ll move into a future that is just a direct progression from the pre-2013 development of technology, which is where you can’t trust your phone. You would need some other device. You would need to act like a spy to pursue a career in a field like journalism because you are always being watched.

On the other hand, there is the idea you don’t need to use these fancy tradecraft methods. You don’t need to worry about your phone spying on you because you don’t need to trust your phone. Instead of changing your phone to change your persona, divorcing your journalist phone from your personal phone, you can use the systems that are surrounding us all of the time to move between personas. If you want to call a cab, the cab doesn’t need to know about who you are or your payment details.

You should be able to buy a bottle of Internet like you buy a bottle of water. There is the technical capacity to tokenize and to commoditize access in a way that we can divorce it from identity in such a way that we stop creating these trails. We have been creating these activity records of everything we do as we go about our daily business as a byproduct of living life. This is a form of pollution; just as during the Industrial Revolution, when a person in Pittsburgh couldn’t see from one corner to another because there was so much soot in the air. We can make data start working for us rather than against us. We just simply need to change the way we look at it.

This interview has been edited and condensed.

This article was originally published in the May/June 2016 issue of Popular Science, under the title “The Internet is Broken.”

Footnotes

- The Torture Memo, co-authored by John Yoo, a lawyer at the Justice Department, authorized enhanced interrogation techniques to be used against ‘unlawful combatants’ in the wake of 9/11. This included ‘abdominal slaps’, ‘longtime standing’, and ‘simulated drowning’ — aka waterboarding.

- The Supreme Court unanimously ruled that police need to obtain a warrant before searching the cell phone of someone who has been arrested.

- According to Google’s most recent Transparency Report, filed in March 2016, 77 percent of traffic on Google’s servers is encrypted.

- In 2015, a three-judge panel for the United States Court of Appeals for the Second Circuit ruled a key portion of the Patriot Act — Section 215, which allowed for bulk collection of Americans’ phone records — was illegal.

- WhatsApp was briefly shut down in Brazil after the company refused to comply with wiretaps mandated by a judge in São Paulo. Roughly 100 million Brazilians use the service. The judge’s order was followed several months later by the arrest of a Facebook executive in Brazil — WhatsApp is owned by Facebook — for failure to turn over information from a WhatsApp account linked to a criminal investigation.

- In April 2016, WhatsApp announced that the service had completed its end-to-end encryption rollout that it began nearly two years prior.

- The encryption algorithm was said to be stronger than anything in use at that point, with a unique twist: the encrypted data would include a copy of the key used to encrypt it, which was held ‘in escrow’ by the federal government. All an agency like the NSA or FBI had to do was take the key out of escrow to decrypt the necessary information.

- In an op-ed published by the Washington Post last December, Blaze wrote, “Clipper failed not because the NSA was incompetent, but because designing a system with a backdoor was — and still is — fundamentally in conflict with basic security principles … For all of computer science’s great advances, we simply don’t know how to build secure, reliable software of this complexity.”

- PRISM was enacted in 2007, and enabled the NSA to collect Internet communications from upwards of nine U.S. companies. In 2013, President Barack Obama weighed in on the NSA’s data-collecting omnipotence, calling the agency’s position “a circumscribed, narrow system directed at us being able to protect our people.”

- One consequence of the hack was Amy Pascal losing her job as co-chairman of Sony Pictures Entertainment.

- About 21.5 million records, including Social Security numbers, are estimated to have been stolen.

- According to a 2015 Pew study, 40 percent of those surveyed believe that social media sites should not save data from their browsing history.

- On May 31, 2015, the most controversial aspects of Section 215 of the Patriot Act, which included the collection of phone records (among others) in bulk, expired.

- President Obama did not agree with the board’s decision, which was announced in January 2014: “I believe it is important that the capability that this program is designed to meet is preserved.”

- In July 2013, then-NSA chief General Keith Alexander alleged that intelligence from the agency’s various surveillance programs prevented 54 different ‘terrorist-related activities.’ That same year, though, Patrick Leahy, a Democratic Senator from Vermont, asked Alexander at a Senate Judiciary Committee hearing about the validity of his statement: “Would you agree that the 54 cases that keep getting cited by the administration were not all plots, and of the 54, only 13 had some nexus to the U.S.?” To which Alexander simply replied, “Yes.”

- The New York Times reported in 2013 that under a program called the Hemisphere Project, the DEA, through the use of subpoenas, has access to all calls that passed through an AT&T switch (which doesn’t limit the calls to AT&T customers) for the past 26 years. This amounted to 4 billion call records daily.

- To Glenn Greenwald of the Guardian in 2013.

- Writing for the majority in Riley v. California, Chief Justice John Roberts wrote, “Opposition to such searches was in fact one of the driving forces behind the Revolution itself.”

- Soltani was hired as a senior advisor in the Office of Science and Technology Policy in late 2015. He was denied security clearance several months after his move was announced, prompting speculation that his reporting on Snowden and mass surveillance at the Washington Post had irked many within the intelligence community.