Eye Robot: Dyson’s Robo-Vacuum Cleans Floors, Revolutionizes Computer Vision

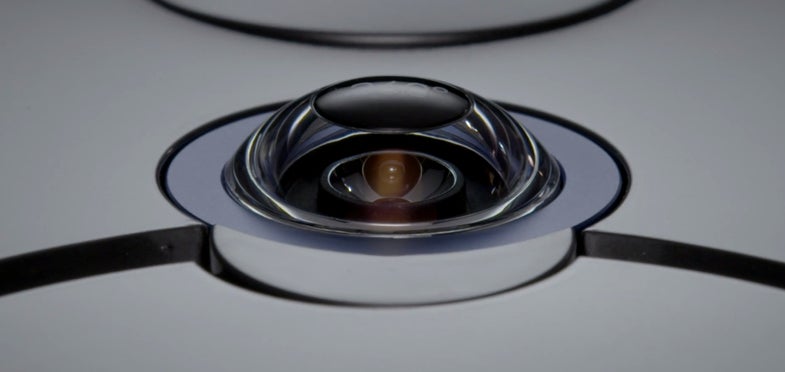

Finally, the Roomba has reason to fear. Last week, high-end vacuum-maker Dyson announced the 360 Eye, a robotic vacuum that navigates through the home using panoramic computer vision. Other cleaning bots have employed cameras, but never quite like this. As its name implies, the Eye sees in 360 degrees, keeping a constant watch on its surroundings as it moves, and using vision-based data to build a map. So while previous domestic robots may have trained their cameras at the ceiling, Dyson’s sees the floor before it, the furniture standing in its way, and even the framed photos hanging on the wall—its field of vision extends 45 degrees upwards, to capture more spatial landmarks. According to Dyson design engineer Nick Schneider, “One of the reasons we developed vision technology is because humans interact with the world primarily in a visual way. So if we built a system that could interact with the world using the same clues, we felt that system would have a significant advantage.”

The implication there is that computer vision imitates human perception, and borrows some of our species’ hard-won, highly evolved biomechanical efficiency. It’s a fine way of simplifying a robotic research goal, but it’s not very accurate.

The 360 Eye doesn’t perceive the world anything like us. Humans are heavily reliant on vision, sure, but our hardware and software are entirely different from this robot’s, or that of any other robot to date. Where the Eye’s proprietary panoramic lens gathers light and projects it down onto a single CCD imaging sensor, gauging the relative distance of everything surrounding the bot, human vision is all about directional, stereoscopic sensors, and the ability to digest raw sensory input. Since our eyes generally come in forward-facing pairs, we get the benefits of depth perception, without having to bear the cognitive load that would come from having eyeballs arrayed around our entire head. That’s too much data for even the mighty human mind to reasonably handle. So the fact that Dyson’s bot sees in a complete, unbroken circle makes it innately inhuman in its perception.

It also makes the 360 Eye a brilliant bit of robotics, and one of the most innovative strategies yet for the thorny problem of computer vision. Most automated systems designed to operate in what roboticists refer to as unstructured environments—where objects can suddenly wander into your path, and where there’s a lack of machine-readable barcodes, infrared reflectors or other breadcrumb trails—use vision as a supplementary sense, leaning instead on lasers, ultrasound, radar, or some combination of the three. Part of the reason for this is money. A single camera isn’t necessarily expensive, but loading up on lenses and sensors, in order to see a wide area, can start to be cost-prohibitive. The other, more technologically interesting stumbling block for computer vision is processing. What does a machine do with all of the photons it’s collecting?

This is a problem that most humans have well in hand, thanks to the gray matter sitting in our skulls. People are able to effortlessly pick out specific objects from visual noise, instantly classifying those objects, and coming up with a constantly updated matrix of meaning and consequence. We know, for example, that human beings don’t tend to collide with each other while walking, so the fellow approaching you in the hallway will probably get out of the way. And you know this because you understand what eyes are, what a face is, and that this person’s eyes and face are aimed at you, and that there’s no steely glint of malice or insanity in his manner that might indicate an imminent hockey check into the wall.

Robots know none of that. This lack of cognition—an artificial intelligence problem, more than a straight robotic one—tends to make computer vision far less useful than human vision, despite the best efforts of researchers.

I don’t mean to dismiss those efforts, by the way, some of which are worth noting. Rambus, a semiconductor maker based in California, is currently developing vision sensors that have no lens, and are, at 200 microns across, about twice as big as the diameter of a human hair. Since they’re little more than a plastic film, with no optical glass, these vision dots would be extremely durable (surviving hundreds of gees of mechanical force), remarkably cheap (about 10 cents each), and low-powered, sipping energy harvested from local sources, such as tiny solar panels. You could, in other words, stick them everywhere, studding the arms of robots to provide a better sense of limb position, embedding them in the back of a humanoid’s head to increase general awareness, or clustering them around more traditional cameras. Though the resolution of the images that each dot captures is minuscule, they excel at recognizing patterns. As Rambus principal research scientist Patrick Gill points out, they could function like the smaller eyes that many spiders have. “They would notice when the robot should be aware of some change of motion, so it can focus a higher-resolution camera in that direction,” says Gill.

Though Rambus plans to get prototypes in the hands of developers and members of the maker community next year, these lensless sensors are still an unproven strategy for low-cost computer vision. On the other end of the spectrum is Hans Moravec, a pioneering roboticist who co-founded Seegrid Systems in 2003. Seegrid’s future is relatively uncertain—the company is currently mired in financial troubles—but its core technology has always been impressive. The Pittsburgh-based firm builds autonomous forklifts and cargo movers for industrial settings, based on Moravec’s work in 3D vision. The robots use an array of inexpensive consumer-grade image sensors to look in as many directions as possible, getting a broad visual sense of the environment. This visual sweep includes—and this is crucial—features that have nothing to do with avoiding collisions. Seegrid’s autonomous ground vehicles (AGVs) can note where a given air duct might be on a warehouse ceiling, in relation to a group of boxes stacked on top of a specific shelf. These robo-forklifts are building situational awareness, creating a map that includes things to avoid crashing into, along with things that simply function as landmarks. By referring back to these maps, they can move with more speed and confidence than a robot that’s constantly rebuilding its perception of what should be a familiar workspace.

Dyson’s approach also builds situational awareness, but does it with fewer cameras, and a much shorter memory. The 360 Eye sees with that single CCD sensor, and doesn’t store the maps it generates, so every cleaning run is a new exercise in making sense of the world around it. It’s less capable than a Seegrid bot, in other words. But that’s okay. Although Dyson hasn’t said how much the Eye will cost when it hits stores next year, it will certainly be less than the thousands of dollars that Seegrid charges for robotizing an existing industrial vehicle. Will Dyson’s robot vacuum be cost-competitive with iRobot’s line of Roombas, which range from $400 to $700? My guess is no—Dyson commands a premium among human-controlled vacuums, so expect a relative price hike for its first autonomous model. It will still, however, have to be affordable enough to attract consumers who already have a Roomba (or similar bot), and want an upgrade, or who’ve been waiting for a more impressive attempt at robotic chore reduction.

Granted, it’s a little presumptuous to hail Dyson’s low-cost spin on computer vision months (or even a year) before the 360 Eye gets an actual price. In fact, Schneider wouldn’t provide many detailed specs for the current camera, such as its resolution, since hardware could easily change between now and the product’s release in 2015. But most of these details are relevant for next year’s robo-vacuum business story, meaning Dyson’s upcoming deathmatch with iRobot. For the wider field of robotics, the news here is an apparent breakthrough in cheap, effective computer vision, which could have implications beyond the niche category of automated vacuuming. Seeing is imperative for the classes of bot that consumers seem to want most. Machines that are able to drive, clean, care for the elderly, or do anything else that requires being in close proximity to people can benefit from more useful eyes. Or, as it were, one all-seeing cyclopean eye.

Another reason to care about Dyson’s first robot: it probably won’t be Dyson’s last. Schneider couldn’t share specifics, but confirmed that the company isn’t licensing out its computer vision technology, and is continuing to explore the in-house development of other types of robots. “The vision system makes that a definite possibility,” says Schneider. “Stay tuned.”