Targeted Video Compression Brings Cell Phones to Sign Language Users

Most people use the video camera on their phone for bootlegging concert footage or recording drunken antics. But for the deaf, to whom cellphones’ audio capability is moot, cellphone video offers a chance to expand beyond texting, and into the more expressive communication of American Sign Language. Unfortunately, low-bandwidth American cellphone lines can’t carry video clear enough for sign language.

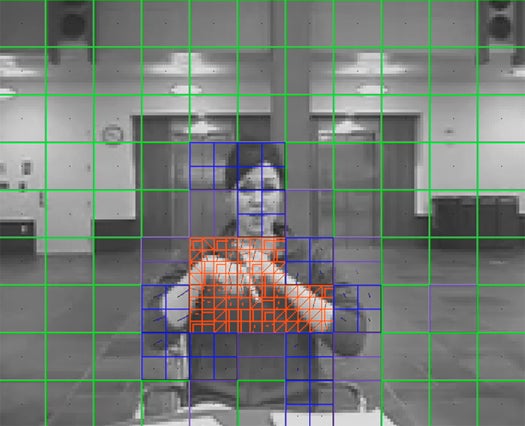

That’s where MobileASL comes in. This multi-university project developed a special algorithm that selectively compresses the video, lowering the resolution of everything but the speaker’s hands. That shrinks the video to the point where it can pass over regular cellphone signals. And now, the MobileASL project has developed the first prototype phone that incorporates this technology.

The algorithm uses skin-tone sensing and motion tracking to separate the hands from the rest of the image. Once the hands are identified, the phone’s computer dials the fidelity of the rest of the image way down, while increasing the sharpness of the speaker’s hands. The resulting video has hands clear enough for ASL users to understand, but a total size small enough for American cellphone carriers to handle.
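To make the idea concrete, here is a rough sketch of region-of-interest compression in plain Python. It is not MobileASL's actual code: the skin-tone rule is a common RGB heuristic, and the names `is_skin` and `compress_frame`, the block size, and every threshold are assumptions chosen for illustration. A block is kept at full fidelity only if it is mostly skin-colored and has changed since the previous frame; everything else is coarsely quantized.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Frame = List[List[Pixel]]      # rows of pixels


def is_skin(p: Pixel) -> bool:
    """Simple RGB skin-tone heuristic (an illustrative assumption)."""
    r, g, b = p
    return (r > 95 and g > 40 and b > 20
            and max(p) - min(p) > 15
            and abs(r - g) > 15 and r > g and r > b)


def quantize(p: Pixel, step: int) -> Pixel:
    """Round each channel down to a multiple of `step`, discarding detail."""
    return tuple((c // step) * step for c in p)


def compress_frame(prev: Frame, curr: Frame, block: int = 2,
                   motion_threshold: float = 20.0, min_skin: float = 0.5,
                   coarse_step: int = 64):
    """Return (output frame, per-block map of regions kept sharp).

    A block counts as "hands" when it is mostly skin-toned AND moving.
    All other blocks are coarsely quantized to cut their cost.
    """
    h, w = len(curr), len(curr[0])
    out = [row[:] for row in curr]
    roi = []
    for by in range(0, h, block):
        roi_row = []
        for bx in range(0, w, block):
            coords = [(y, x)
                      for y in range(by, min(by + block, h))
                      for x in range(bx, min(bx + block, w))]
            # Fraction of skin-colored pixels in this block.
            skin = sum(is_skin(curr[y][x]) for y, x in coords) / len(coords)
            # Mean absolute per-channel change since the previous frame.
            motion = sum(abs(a - b)
                         for y, x in coords
                         for a, b in zip(curr[y][x], prev[y][x]))
            motion /= 3 * len(coords)
            keep = skin >= min_skin and motion >= motion_threshold
            roi_row.append(keep)
            if not keep:
                for y, x in coords:
                    out[y][x] = quantize(curr[y][x], coarse_step)
        roi.append(roi_row)
    return out, roi
```

Requiring motion as well as skin tone is what lets a sketch like this leave a still face at low fidelity while keeping moving hands sharp. A real encoder would vary the quantization inside the video codec rather than on raw pixels.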

European and Asian users have used their phones for sign language for years, since their high-speed cellphone networks can handle uncompressed video featuring clear hands without the help of a fancy new program. Now Americans can join the party.

For more on how MobileASL works, and for a shot of the prototype device, check out this video: