Oxford Institute Forecasts The Possible Doom Of Humanity

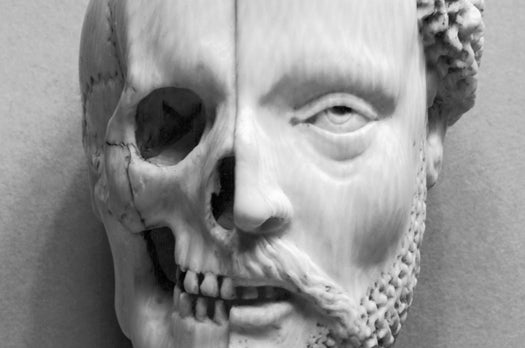

What's the greatest threat to our species' continued existence? Take a look in the mirror.

Most of us are content to just worry about the future of humanity in our spare time, but there’s an entire group of academics at Oxford University in England who make that their professional mission.

Each member of the Future of Humanity Institute has their own focus. Some are concerned with climate change and its impact on humanity; others with the future of human cognition. Department head Nick Bostrom, whose paper “Existential Risk Prevention As Global Priority” has just been published, has a long history of being worried about our future as a species. Bostrom posits that humanity is the greatest threat to humanity’s survival.

Bostrom’s paper is concerned with a particular time-scale: Can humanity survive the next century? This rules out some of the more unlikely natural scenarios that could snuff out humans in the more distant future: supervolcanoes, asteroid impacts, gamma-ray bursts and the like. The chances of one of those happening within the very narrow timeframe involved are, according to the paper, extremely small. Further, most other natural disasters, such as a pandemic, are unlikely to kill all humans; we as a species have survived many pandemics and will likely do so again in the future.

The Personal

According to Bostrom, the types of civilization-ending disasters we may unleash upon ourselves include nuclear holocausts, badly programmed superintelligent entities and, my personal favorite, “we are living in a simulation and it gets shut down.” (As an aside, how the hell do you prepare for that eventuality?) Additionally, humans face four different categories of existential risk:

Extinction: we off ourselves before we reach technological maturity

Stagnation: we stay mired in our current technological and intellectual backwater

Flawed realization: we advance technologically…in a way that isn’t sustainable

Subsequent ruination: we reach sustainable technological maturity and then eff it all up anyway

More pointedly, Bostrom’s paper is a renewal of a call-to-arms he issued a decade ago imploring people to wake up to the possibility that we will kill ourselves with technology. These days, he’s not so much concerned with the how — existential death by grey goo vs existential death by sentient robots is still existential death. He’s most concerned that there’s nobody out there really doing anything about this problem. That’s understandable, of course. Existential threats are nebulous concepts, and even the threat of nuclear winter was not enough to terrify certain governments into, you know, not building thermonuclear weapons.

Bostrom, of course, can’t help but come off a bit high-handed when he laments that there are far too many papers in the academic literature on “less-important topics,” such as “dung beetles” and “snowboarding.” This is amusingly wrong-headed. If I’ve learned anything at all during my stint on this planet, it is that if a topic exists, somebody will make it their life’s work to ask questions about it.

Like every SF fan out there, I love a good dystopian narrative, disaster scenario, and potential civilization-ending cataclysm. They make for interesting drama and invite exploration of the ethical implications of certain actions. If Bostrom and his team wish to kick-start a juggernaut of end-times scientific research by hiring smart young research assistants to help solve some of these problems, then I applaud their efforts and look forward to reading their scenarios. Let’s hope that next time they can leave the pointless criticism of other legitimate avenues of research out of it.