Meet A Woman Who Trains Robots For A Living

Teaching the art of learning through algorithms and pasta

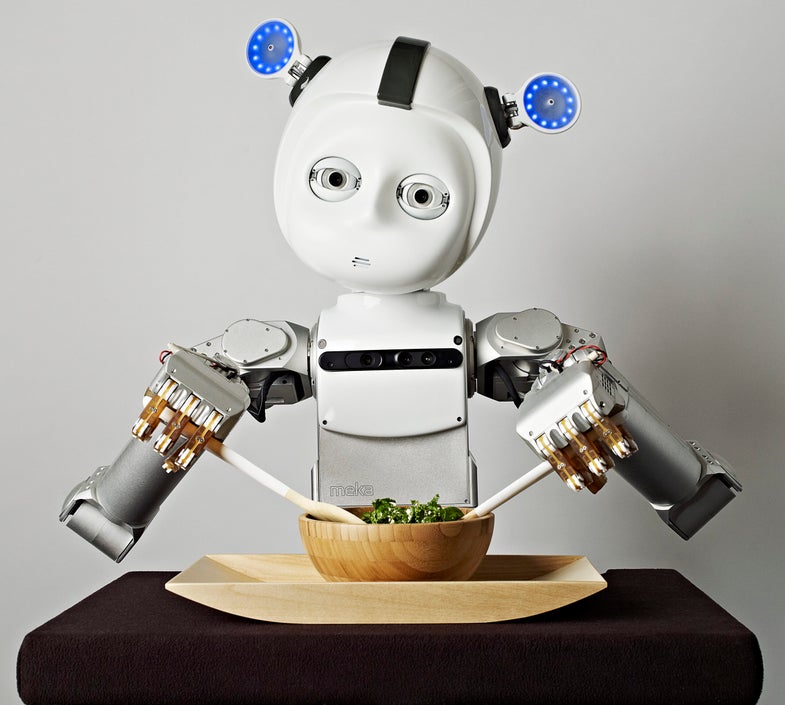

Andrea Thomaz, who directs Georgia Tech’s Socially Intelligent Machines Lab, teaches a one-of-a-kind student: a robot with light-up ears, named Curi. We asked Thomaz how she envisions life with robots unfolding.

Popular Science: Why should robots be able to learn?

Andrea Thomaz: Personal robots are going to be out in human environments, and it’s going to be really hard for engineers to think of all the things we’re going to want those robots to do. So my lab is trying to enable end users to teach robots. We’re thinking about different elements, from the interaction itself—how should the robot phrase questions so that it’s gathering the right kind of information?—to machine learning, or algorithms to deal with the kind of input people will provide.

PS: Can you describe a typical interaction with Curi in the lab?

AT: We’re teaching Curi to help out in the kitchen—scoop some pasta from a pot onto a plate and serve the sauce on top. One way to do that is by physically showing the robot. You say, “Here, Curi, let me teach you how to scoop the pasta.” And you kind of drag the robot’s arm through the motion. Then you ask, “Can you show me what you learned?” and the robot will try to repeat the task, or it could ask questions like, “At this point, is it important that my hand is positioned like this?” in order to build a better model.

PS: Are you also watching how people interact with the robot?

AT: Absolutely. Usually, with a little bit of practice, people can do whatever interaction [with Curi] we’ve designed. At the end of that, we ask open-ended questions about how they wish they could have interacted with the robot. People usually say they would like to be able to watch the robot try to do the learned task and then say, “good job,” or give positive or negative feedback. Some algorithms could make use of that. So that’s one thing we look at: What kinds of input do people naturally want to provide and how do we design algorithms that fit that?

PS: Is the pasta task one way you envision robots being part of our lives?

AT: I think we could start seeing personal robots in all parts of our life. Those that are envisioned most at robotics conferences are assistive robots, educational robots, and robots that can help in hospitals. When we ask people in our lab, the thing they want most is Rosie the Robot—a robot to clean their house and do the stuff they don’t want to do.

PS: In the movie Robot & Frank, a robot acts as a caregiver for an elderly man. Just how sci-fi is that scenario?

AT: For a lot of people in the community of human-robot interaction, that is the target—getting robots to a low enough price point that somebody could purchase one to be in their home and do something useful. We’re definitely not there yet, but we’re going to get there. The part of Robot & Frank that matches the vision I have for my lab is that the robot is adaptable. It’s kind of terrible that the owner teaches the robot how to steal jewelry, but he was able to define what he wants the robot to do and train it. It’s collaborative. That, I think, is going to have to be some part of the end-user interaction.

This article originally appeared alongside “Will Your Next Best Friend Be A Robot?” in the November 2014 issue of Popular Science.